As building Generative AI becomes more mainstream, there are two NVIDIA GPU models that have risen to the top of every AI builder’s infrastructure wishlist—the H100 and A100. The H100 was released in 2022 and is the most capable card in the market right now. The A100 may be older, but is still familiar, reliable and powerful enough to handle demanding AI workloads.

There’s a lot of information out there on the individual GPU specs, but we repeatedly hear from customers that they still aren’t sure which GPUs are best for their workload and budget. H100s look more expensive on the surface, but can they save more money by performing tasks faster? A100s and H100s have the same memory size, so where do they differ the most?

With this post, we want to help you understand the key differences to look out for between the main GPUs (H100 vs A100) currently being used for ML training and inference.

Technical Overview

| | A100 SXM | H100 PCIe | H100 SXM |
| --- | --- | --- | --- |
| FP64 | 9.7 teraFLOPS | 26 teraFLOPS | 34 teraFLOPS |
| FP64 Tensor Core | 19.5 teraFLOPS | 51 teraFLOPS | 67 teraFLOPS |
| FP32 | 19.5 teraFLOPS | 51 teraFLOPS | 67 teraFLOPS |
| TF32 Tensor Core | 312 teraFLOPS* | 756 teraFLOPS* | 989 teraFLOPS* |
| BFLOAT16 Tensor Core | 624 teraFLOPS* | 1,513 teraFLOPS* | 1,979 teraFLOPS* |
| FP16 Tensor Core | 624 teraFLOPS* | 1,513 teraFLOPS* | 1,979 teraFLOPS* |
| FP8 Tensor Core | - | 3,026 teraFLOPS* | 3,958 teraFLOPS* |
| INT8 Tensor Core | 1,248 TOPS* | 3,026 TOPS* | 3,958 TOPS* |
| GPU memory | 80GB HBM2e | 80GB HBM2e | 80GB HBM3 |
| GPU memory bandwidth | 2TB/s | 2TB/s | 3.35TB/s |
| Max thermal design power (TDP) | 400W | 300-350W (configurable) | Up to 700W (configurable) |
| Interconnect | NVLink: 600GB/s; PCIe Gen4: 64GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s | NVLink: 900GB/s; PCIe Gen5: 128GB/s |

TABLE 1 - Technical Specifications NVIDIA A100 vs H100 (* with sparsity)

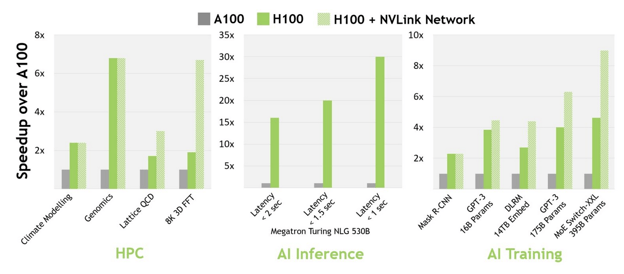

According to NVIDIA, H100 performance can be up to 30x better for inference and 9x better for training. The gains come from higher GPU memory bandwidth, an upgraded NVLink with up to 900 GB/s of bandwidth, and higher compute performance: the H100's peak floating-point operations per second (FLOPS) are over 3x those of the A100.

Tensor Cores: The new fourth-generation Tensor Cores on the H100 are up to 6x faster chip-to-chip than those on the A100. The gain combines a per-streaming-multiprocessor (SM) speedup (2x Matrix Multiply-Accumulate), a higher SM count, and higher clocks. Notably, the H100 Tensor Cores support 8-bit floating-point (FP8) inputs, which substantially increases speed at that precision.

Memory: The H100 SXM uses HBM3 memory, which provides nearly a 2x bandwidth increase over the A100. The H100 SXM5 is the world’s first GPU with HBM3 memory, delivering over 3 TB/s of memory bandwidth. Both the A100 and the H100 have up to 80GB of GPU memory.

NVLink: The fourth-generation NVIDIA NVLink in the H100 SXM provides a 50% bandwidth increase over the prior generation, with 900 GB/s of total bandwidth for multi-GPU I/O. That is 7x the bandwidth of PCIe Gen 5.
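The ratios quoted above can be checked against Table 1. The snippet below is a minimal sketch that takes the A100 SXM and H100 SXM figures from the table as given and divides them; the dictionary keys and the `ratio` helper are illustrative names, not part of any NVIDIA tool.

```python
# Peak figures copied from Table 1 (A100 SXM vs H100 SXM).
# The Tensor Core entry is the starred value from the table.
A100_SXM = {
    "fp16_tensor_tflops": 624,
    "memory_bandwidth_tbs": 2.0,
    "nvlink_gbs": 600,
}
H100_SXM = {
    "fp16_tensor_tflops": 1979,
    "memory_bandwidth_tbs": 3.35,
    "nvlink_gbs": 900,
}


def ratio(metric: str) -> float:
    """Return the H100 SXM / A100 SXM ratio for one metric."""
    return H100_SXM[metric] / A100_SXM[metric]


for metric in A100_SXM:
    print(f"{metric}: {ratio(metric):.2f}x")
```

On the Table 1 values, the peak FP16 Tensor Core ratio lands a little above 3x and the NVLink ratio is 1.5x, in line with the claims above, while the memory bandwidth ratio works out to roughly 1.7x.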

Performance Benchmarks

At the launch of the H100, NVIDIA claimed that it could “deliver up to 9x faster AI training and up to 30x faster AI inference speedups on large language models compared to the prior generation A100.” NVIDIA's own published figures and tests support this claim. However, the models chosen and the test parameters (i.e. size and batches) favored the H100, so these figures should be taken with a pinch of salt.

NVIDIA Benchmarking - NVIDIA H100 vs A100

Other sources have run their own benchmarks, which put the H100's training speedup over the A100 closer to 3x. For example, MosaicML ran a series of tests on language models with varying parameter counts and found the following:

| Model | Precision | Throughput (tokens/sec) | TFLOPS | Speedup over A100 @BF16 |
| --- | --- | --- | --- | --- |
| 1B | BF16 | 43,352 | 394 | 2.2x |
| 1B | FP8 | n/a | 489 | 2.7x |
| 3B | BF16 | n/a | 412 | 2.2x |
| 3B | FP8 | n/a | 525 | 2.8x |
| 7B | FP8 | n/a | 580 | 3.0x |
| 30B | FP8 | n/a | 752 | 3.3x |

MosaicML Benchmarking - NVIDIA H100 vs A100

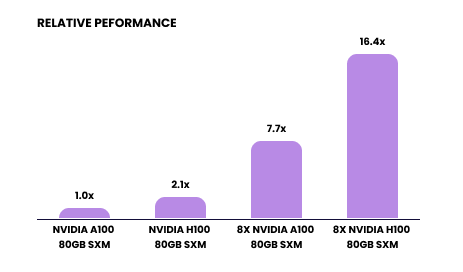

Lambda Labs saw smaller improvements when benchmarking both GPUs on training a Large Language Model (a GPT3-like model with 175B parameters) using FlashAttention2. In this case, the H100 performed ~2.1x better than the A100.


FlashAttention2 Training on a 175B LLM

Although these benchmarks provide valuable performance data, they are not the only consideration. It's crucial to match the GPU to the specific AI task at hand. Additionally, the overall cost must be factored into the decision to ensure the chosen GPU offers the best value and efficiency for its intended use.

Cost & Performance Considerations

The performance benchmarks show that the H100 comes out ahead, but does it make sense from a financial standpoint? After all, the H100 is regularly more expensive than the A100 at most cloud providers. For example, at Ori you can find A100s starting at $1.80 per hour, while the H100 starts at $3.08 per hour (71% more expensive).
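One way to frame the question is cost per unit of work: if the H100 costs 71% more per hour, it has to finish the same job more than about 1.71x faster to be cheaper overall. The sketch below works through that arithmetic using the Ori prices quoted above and the training speedups reported in the benchmarks section. The function name is illustrative, and the assumption that a benchmark speedup translates directly into fewer billed GPU-hours is a simplification.

```python
A100_PRICE_PER_HOUR = 1.80  # USD, Ori starting price quoted above
H100_PRICE_PER_HOUR = 3.08  # USD, Ori starting price quoted above


def relative_job_cost(speedup: float) -> float:
    """Cost of a job on H100 as a fraction of its cost on A100.

    Assumes the job takes 1/speedup of the A100 time on an H100 and
    that billing is purely per GPU-hour (a simplification).
    """
    price_ratio = H100_PRICE_PER_HOUR / A100_PRICE_PER_HOUR
    return price_ratio / speedup


# The H100 is cheaper per job only when its speedup exceeds the price ratio.
break_even_speedup = H100_PRICE_PER_HOUR / A100_PRICE_PER_HOUR
print(f"Break-even speedup: {break_even_speedup:.2f}x")

# Speedups reported in the benchmarks above (Lambda Labs, MosaicML range).
for speedup in (2.1, 2.2, 3.0, 3.3):
    saving = 1 - relative_job_cost(speedup)
    print(f"{speedup:.1f}x faster -> {saving:.0%} cheaper on H100")
```

Under these assumptions, every speedup reported in the benchmarks above clears the break-even point, so the H100 comes out cheaper per job even at the more conservative ~2.1x figure.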

To better understand whether the H100 is worth the higher cost, we can use work from MosaicML, which estimated the time required to train a 7B parameter LLM on 134B tokens:

| GPU | GPU Hours to Train | Approx. Cost |
| --- | --- | --- |
| 8 x H100 (BF16) | 5,220 | $128,620.80 |
| 8 x H100 (FP8) | 4,100 | $101,024.00 |
| 8 x A100 | 11,462 | $165,052.80 |

Estimated time and cost to train a 7B parameter LLM on 134B tokens (MosaicML estimates, Ori pricing)

If we apply Ori’s pricing to these GPUs, training such a model on a pod of H100s can be up to 39% cheaper and take up to 64% less time. Of course, this comparison is mainly relevant for LLM training at FP8 precision and might not hold for other deep learning or HPC use cases.
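The figures in the table can be reproduced with a few lines of arithmetic. This sketch assumes, as the table's costs imply, that each hours figure is multiplied by the 8 GPUs in the pod and by Ori's per-GPU hourly price; the variable and function names are illustrative.

```python
GPUS_PER_POD = 8

# (hours from the MosaicML estimate, Ori price per GPU-hour in USD)
runs = {
    "8 x H100 (BF16)": (5_220, 3.08),
    "8 x H100 (FP8)": (4_100, 3.08),
    "8 x A100": (11_462, 1.80),
}


def approx_cost(hours: int, price_per_gpu_hour: float) -> float:
    """Assumed formula: hours x GPUs in the pod x hourly price per GPU."""
    return hours * GPUS_PER_POD * price_per_gpu_hour


costs = {name: approx_cost(h, p) for name, (h, p) in runs.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f}")

# Compare the fastest H100 configuration against the A100 baseline.
h100_hours = runs["8 x H100 (FP8)"][0]
a100_hours = runs["8 x A100"][0]
cost_saving = 1 - costs["8 x H100 (FP8)"] / costs["8 x A100"]
time_saving = 1 - h100_hours / a100_hours
print(f"H100 (FP8) vs A100: {cost_saving:.0%} cheaper, {time_saving:.0%} less time")
```

Swapping in a different provider's hourly prices, or the BF16 row instead of FP8, shows how sensitive the saving is to both the price gap and the precision used.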

Get started with Ori

Get started at ori.co and get access to on-demand H100s, A100s and more GPUs from Ori Global Cloud (OGC). Alternatively, contact us and we can help you set up a private GPU cluster that matches your every need.