Today, there are plenty of options to run inference on popular generative AI models. However, several of these inference services are inflexible for business use cases or too complex and expensive. That’s where Ori Inference Endpoints comes in as an effortless and scalable way to deploy state-of-the-art machine learning models with dedicated GPUs. In this tutorial, we’ll learn how to create an interactive chatbot application, powered by Ori Inference Endpoints.

Effortless, Secure Inference at any Scale

Deploy the model of your choice: Whether it’s DeepSeek R1, Llama 3, Qwenor Mistral, deploying a multi-billion parameter model is just a click away.

Select a GPU and region. Unlock seamless inference: serve your models on NVIDIA H100 SXM, H100 PCIe, L40S or L4 GPUs, with H200 GPUs coming soon, and deploy in a region that helps minimize latency for your users.

Autoscale without limits: Ori Inference Endpoints automatically scales up or down based on demand. You can also scale all the way down to zero, helping you reduce GPU costs when your endpoints are idle.

Optimized for quick starts: Model loading is designed to launch instantly, making scaling fast, even when starting from zero.

HTTPS secured API endpoints: Experience peace of mind with HTTPS endpoints and authentication to keep them safe from unauthorized use.

Pay for what you use, by the minute: Starting at $0.021/min, our per-minute pricing helps you keep your AI infrastructure affordable and costs predictable. No long-term commitments, just transparent, usage-based billing.

Deploy your Chatbot with Ori Inference Endpoints

Prerequisites

Before you begin, make sure you:

- Spin up an Inference Endpoint on Ori. Note the endpoint's API URL and API Access Token.

- Have Python 3.10 or higher, and preferably a virtual environment.

- Install Gradio, Requests and OpenAI packages

pip install --upgrade gradio

Note: Gradio 5 or higher must be installed.

Step 1: Build Your Gradio bot

Here's the Python script to bring your chatbot to life. It connects to your Ori inference endpoint to process user messages. In our case, we deployed the Qwen 2.5 1.5B-instruct model to the Ori endpoint. Gradio handles the formatting of the model's response in a user-friendly and readable way, so you don't need to worry about restructuring the generated text.

Save the Python code below as chatbot.py

import os

from collections.abc import Callable, Generator

from gradio.chat_interface import ChatInterface

# API Configuration

ENDPOINT_URL = os.getenv("ENDPOINT_URL")

ENDPOINT_TOKEN = os.getenv("ENDPOINT_TOKEN")

if not ENDPOINT_URL:

raise ValueError("ENDPOINT_URL environment variable is not set. Please set it before running the script.")

if not ENDPOINT_TOKEN:

raise ValueError("ENDPOINT_TOKEN environment variable is not set. Please set it before running the script.")

try:

from openai import OpenAI

except ImportError as e:

raise ImportError(

"To use OpenAI API Client, you must install the `openai` package. You can install it with `pip install openai`."

) from e

system_message = None

model = "model"

client = OpenAI(api_key=ENDPOINT_TOKEN, base_url=f"{ENDPOINT_URL}/openai/v1/")

start_message = (

[{"role": "system", "content": system_message}] if system_message else []

)

streaming = True

def open_api(message: str, history: list | None) -> str | None:

history = history or start_message

if len(history) > 0 and isinstance(history[0], (list, tuple)):

history = ChatInterface._tuples_to_messages(history)

return (

client.chat.completions.create(

model=model,

messages=history + [{"role": "user", "content": message}],

)

.choices[0]

.message.content

)

def open_api_stream(

message: str, history: list | None

) -> Generator[str, None, None]:

history = history or start_message

if len(history) > 0 and isinstance(history[0], (list, tuple)):

history = ChatInterface._tuples_to_messages(history)

stream = client.chat.completions.create(

model=model,

messages=history + [{"role": "user", "content": message}],

stream=True,

)

response = ""

for chunk in stream:

if chunk.choices[0].delta.content is not None:

response += chunk.choices[0].delta.content

yield response

ChatInterface(

open_api_stream if streaming else open_api,

type="messages",

).launch(share=True)

Step 2: Set the ENDPOINT_TOKEN and ENDPOINT_URL environment variables:

export ENDPOINT_TOKEN="your_api_token"

export ENDPOINT_URL="your_url"

python chatbot.py

Step 4: Open the Gradio link provided in your browser

See your chatbot in action

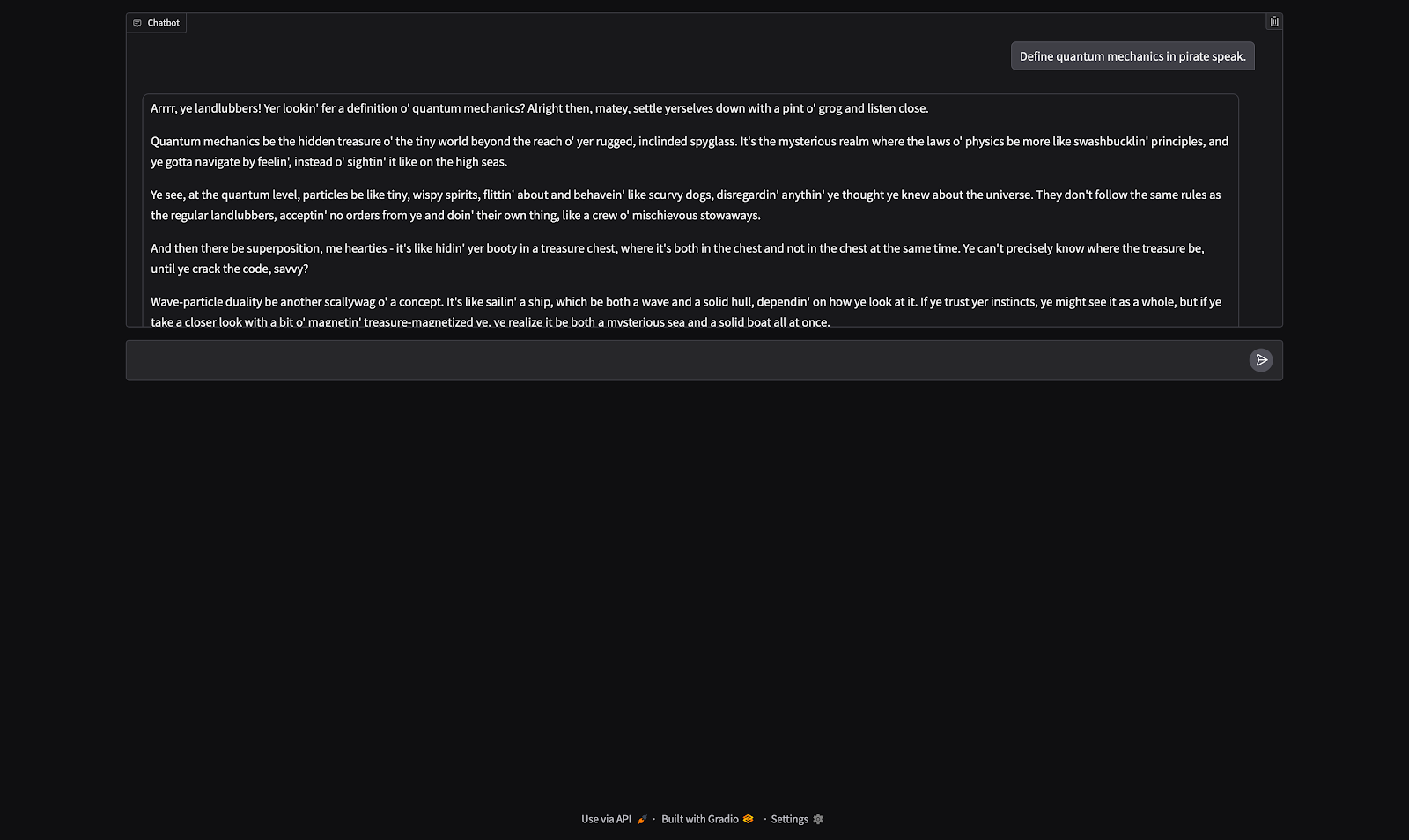

Once your chatbot is live, it will look something like this:

The input box allows you to type messages and the area below it displays the conversation.

Run limitless AI Inference on Ori

Serve state-of-the-art AI models to your users in minutes, without breaking your infrastructure budget.