Alibaba Cloud’s Qwen 2.5 foundation model family has become one of the most popular open-source LLM families, with model downloads topping 40 million and more than 50,000 derivative models. Qwen 2.5 offers coding, math and general-purpose text-generation variants in a range of model sizes, and with support for 29 languages, it provides plenty of choice for different use cases.

Here’s a summary of the Qwen 2.5 specs and performance benchmarks:

| | Qwen 2.5 1.5B |
|---|---|
| Architecture | Transformers with RoPE, SwiGLU, RMSNorm, attention QKV bias and tied word embeddings |
| Parameters | 1.54B (non-embedding: 1.31B) |
| Model variants | 2.5, 2.5 Coder, 2.5 Math |
| Context length / generation length | Full context 32,768 tokens / generation 8,192 tokens |
| Licensing | |
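The gap between the total and non-embedding parameter counts is the token-embedding matrix. As a quick sanity check, assuming the vocabulary size (151,936) and hidden size (1,536) from the model's published Hugging Face configuration (neither number appears in the table above), the arithmetic lines up:

$$
\underbrace{151{,}936}_{\text{vocabulary}} \times \underbrace{1{,}536}_{\text{hidden size}} = 233{,}373{,}696 \approx 0.23\text{B} = 1.54\text{B} - 1.31\text{B}
$$

Because the word embeddings are tied, the same matrix also serves as the output projection, so it is counted once rather than twice.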

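The 1.5B variant of Qwen 2.5 is an impressive small language model (SLM). It outperforms comparable SLMs such as Gemma2 2.6B on five of the eight benchmarks below (MMLU, MMLU-Pro, MMLU-Redux, BBH and TruthfulQA), while Gemma2 2.6B keeps the lead on ARC-C, Winogrande and HellaSwag.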

| Benchmark | Qwen 2.5 1.5B | Gemma2-2.6B |
|---|---|---|
| MMLU | 60.9 | 52.2 |
| MMLU-Pro | 28.5 | 23.0 |
| MMLU-Redux | 58.5 | 50.9 |
| BBH | 45.1 | 41.9 |
| ARC-C | 54.7 | 55.7 |
| TruthfulQA | 46.6 | 36.2 |
| Winogrande | 65.0 | 71.5 |
| HellaSwag | 67.9 | 74.6 |
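To see where each model leads and by how much, here is a small awk tally of the benchmark table, with the scores copied from it. It prints the margin per benchmark and an unweighted mean per model. Treat the mean as a rough summary only, since the benchmarks measure different skills. The function name `tally_benchmarks` is illustrative.

```shell
# Print the leader and margin (Qwen minus Gemma) for each benchmark,
# then a win count and the unweighted mean score for each model.
# tally_benchmarks is an illustrative name; scores are copied from the table.
tally_benchmarks() {
  awk '
    {
      qwen += $2; gemma += $3; n++
      if ($2 > $3)      { qwen_wins++; leader = "Qwen 2.5 1.5B" }
      else if ($2 < $3) { leader = "Gemma2-2.6B" }
      else              { leader = "tie" }
      printf "%-12s %-14s %+.1f\n", $1, leader, $2 - $3
    }
    END {
      printf "Qwen 2.5 1.5B leads on %d of %d benchmarks\n", qwen_wins, n
      printf "Mean score: Qwen 2.5 1.5B %.2f, Gemma2-2.6B %.2f\n", qwen / n, gemma / n
    }
  ' <<'EOF'
MMLU        60.9 52.2
MMLU-Pro    28.5 23.0
MMLU-Redux  58.5 50.9
BBH         45.1 41.9
ARC-C       54.7 55.7
TruthfulQA  46.6 36.2
Winogrande  65.0 71.5
HellaSwag   67.9 74.6
EOF
}

tally_benchmarks
```

On this data it reports that Qwen 2.5 1.5B leads on 5 of 8 benchmarks, with unweighted means of 53.40 versus 50.75.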

How to run Qwen 2.5 1.5B with autoscaling on Ori Inference Endpoints

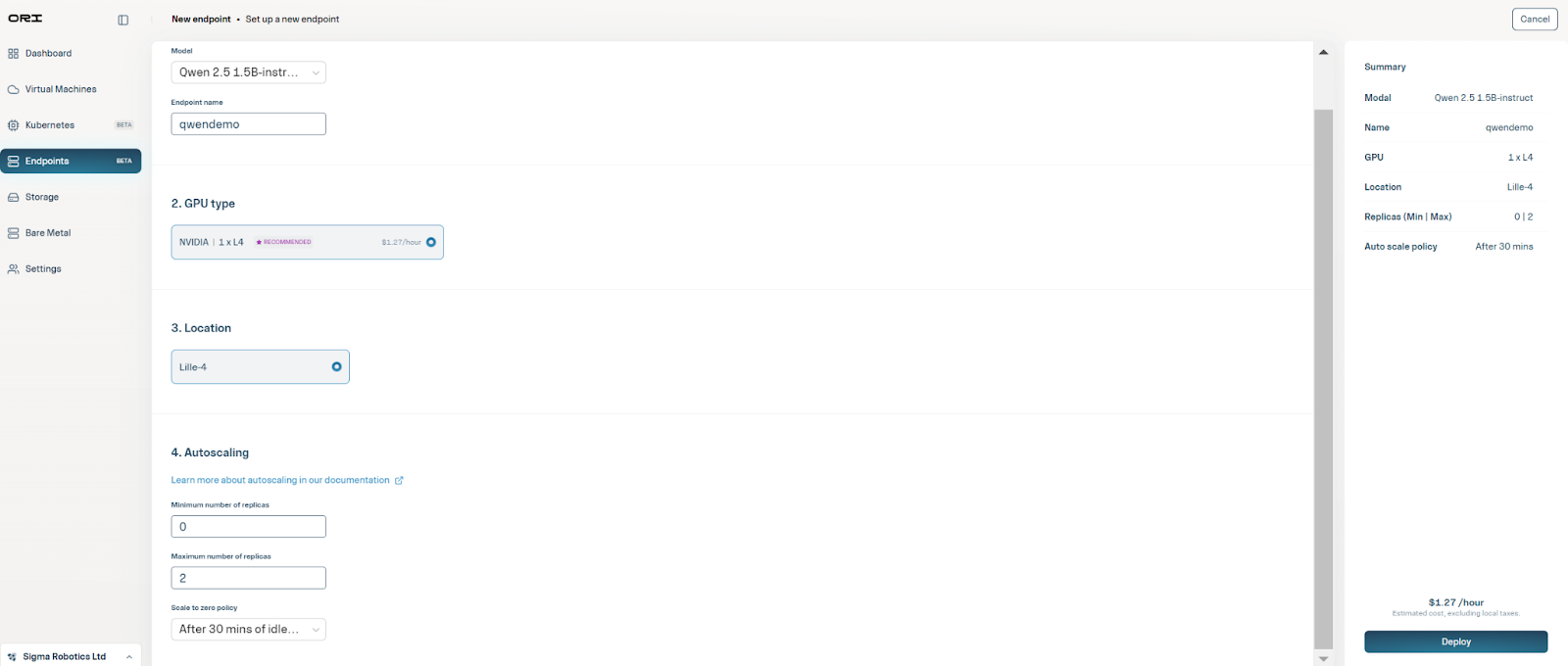

Ori Inference Endpoints is an easy and scalable way to deploy state-of-the-art machine learning models as API endpoints. Here’s how you can deploy and scale Qwen 2.5 on Inference Endpoints effortlessly.

Step 2: Select Qwen 2.5 1.5B from the model drop-down list.

Step 3: Choose Compute Resources

- Select a GPU of your choice to host the model. Not sure which GPU suits your needs? We’ll recommend one, helping you balance model performance and GPU costs.

- Pick a data center location where you want to deploy the model.

Step 4: Configure autoscaling. Ori Inference Endpoints can scale your inference up or down automatically:

- Specify the minimum and maximum number of GPU replicas you expect to need to serve your requests. Setting the minimum to zero lets the endpoint scale down to zero replicas, which reduces GPU costs when it is idle.

- Set how long an endpoint can stay idle before it automatically scales down to zero.

Step 5: Hit Deploy and your Inference Endpoint will be ready shortly!

Here’s a snapshot of the Endpoint creation flow:

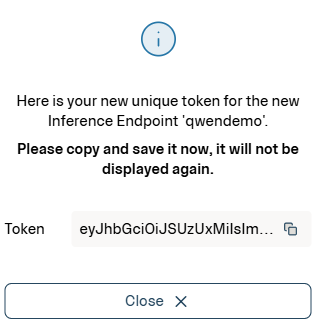
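Scaling to zero has a trade-off: with no replica running, the first request after an idle period has to wait for one to start. Assuming such a request may fail or time out in the meantime, a client can simply retry it with a growing delay. The helper `retry_with_backoff` below is a hypothetical sketch, not part of Ori's tooling; it re-runs any command with exponential backoff.

```shell
# Re-run a command until it succeeds, doubling the wait after each failure.
# retry_with_backoff is a hypothetical helper, not part of Ori's tooling.
# Arguments: max attempts, initial delay in whole seconds, then the command.
retry_with_backoff() {
  local max_attempts="$1" delay="$2"
  shift 2
  local attempt=1
  until "$@"; do
    if (( attempt >= max_attempts )); then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    delay=$(( delay * 2 ))
    attempt=$(( attempt + 1 ))
  done
  return 0
}

# Example: up to 5 attempts, waiting 10s, 20s, 40s and 80s between them.
# --fail makes curl exit non-zero on HTTP errors (such as 5xx) so they are
# retried; --max-time bounds each attempt. ACCESS_TOKEN and ACCESS_URL are
# set as described in the next section.
# retry_with_backoff 5 10 curl -s --fail --max-time 120 \
#   -H "Content-Type: application/json" \
#   -H "Authorization: Bearer $ACCESS_TOKEN" \
#   -d '{"model": "model","messages":[{"role": "user", "content": "Hello"}],"max_tokens": 50,"stream": false}' \
#   "$ACCESS_URL/openai/v1/chat/completions"
```

Exponential backoff keeps the number of requests low while a replica starts, without making you wait long once it is up.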

How to access your Qwen 2.5 1.5B endpoint

As soon as your endpoint is created, you receive an authorization token. Every request to the endpoint must include this token in the Authorization header, which keeps access to the endpoint secure.

You'll be able to see a sample cURL command under the endpoint details. Customize your prompt if needed and run the command from your terminal:

```shell
export ACCESS_TOKEN='<authorization-token>'
export ACCESS_URL='<endpoint-url>'

curl -H "Content-Type: application/json" \
  -H "Authorization: Bearer $ACCESS_TOKEN" \
  -d '{"model": "model","messages":[{"role": "user", "content": "Give me a concise summary of the Collatz Conjecture"}],"max_tokens": 200,"stream": false}' \
  "$ACCESS_URL/openai/v1/chat/completions"
```

To pull just the generated text out of the model's JSON response, pipe the output through the jq utility:

```shell
# Install jq (Debian/Ubuntu)
sudo apt install jq

# Send the same request; -s hides curl's progress meter, jq prints only the reply
curl -s -H "Content-Type: application/json" \
  -H "Authorization: Bearer $ACCESS_TOKEN" \
  -d '{"model": "model","messages":[{"role": "user", "content": "Give me a concise summary of the Collatz Conjecture"}],"max_tokens": 200,"stream": false}' \
  "$ACCESS_URL/openai/v1/chat/completions" | jq -r '.choices[0].message.content'
```

Voilà! The model's response now appears in your terminal as plain, readable text.
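Hand-editing the JSON inside single quotes breaks as soon as a customized prompt contains an apostrophe, a double quote or a newline. A minimal sketch, assuming jq is installed and the endpoint accepts the same OpenAI-style body as the sample command: the hypothetical helper `build_payload` lets jq do the escaping, and the equally hypothetical `send_prompt` pipes the result to the endpoint.

```shell
# Build the chat-completions request body with jq so that quotes, apostrophes
# and newlines in the prompt are escaped correctly.
# build_payload is a hypothetical helper; the body mirrors the sample command.
build_payload() {
  local prompt="$1"
  local max_tokens="${2:-200}"  # default matches the sample command
  jq -nc \
    --arg prompt "$prompt" \
    --argjson max_tokens "$max_tokens" \
    '{model: "model",
      messages: [{role: "user", content: $prompt}],
      max_tokens: $max_tokens,
      stream: false}'
}

# Hypothetical wrapper: send the body (read from stdin via @-) and print only
# the reply. Requires ACCESS_TOKEN and ACCESS_URL to be exported first.
send_prompt() {
  build_payload "$@" |
    curl -s -H "Content-Type: application/json" \
      -H "Authorization: Bearer $ACCESS_TOKEN" \
      --data-binary @- \
      "$ACCESS_URL/openai/v1/chat/completions" |
    jq -r '.choices[0].message.content'
}
```

For example, `send_prompt "What's the Collatz Conjecture? Answer in one sentence." 100` sends a prompt containing an apostrophe without any manual escaping. `--data-binary` is used instead of `-d` so curl passes the body through unchanged.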

Run limitless AI Inference on Ori

Serve state-of-the-art AI models to your users in minutes, without breaking your infrastructure budget.