Unveiling a New Benchmarking Framework from Ori

Access BeFOri for LLama2 and LLama3 Benchmarks on Nvidia V100s and H100 Chips

Benchmarking llama 3.1 8B Instruct with vLLM using BeFOri to benchmark time to first token (TTFT), inter-token latency, end to end latency, and throughput

When it comes to running large language models (LLMs), performance and scalability are key to achieving economically viable speeds. This is why popular inference engines like vLLM and TensorRT are vital to production scale deployments . Designed for speed and ease of use, open source vLLM combines parallelism strategies, attention key-value memory management and continuous batching to get the most out of your hardware. Namely high-throughput, low-latency, and scalability that is competitive with API providers with the added benefit of data privacy when self-hosting on Ori.

To demonstrate the power of vLLM we ran dozens of benchmarks using BeFOri, the Benchmarking Framework from Ori, with one of the most popular open source models available today and top of the line Nvidia chips in Ori’s public cloud. These benchmarks of Llama 3.1 8B Instruct on Nvidia H100 SXM and A100 chips measure the 3 valuable outcomes of vLLM:

Why did we choose NVIDIA H100 SXM and A100 chips? There is a lot of excitement around NVIDIA’s release of the H200 and Blackwell chips, which are both expected over the next year, but the H100 and A100 are the best chips available on the market today.

You can rent a virtual machine with one NVIDIA H100 on Ori’s Public Cloud for $3.24/hr or one NVIDIA A100 for $2.74/hr. Ori also offers H100 chips through our Serverless Kubernetes offering for $4.16/hr. The H100 chip offers higher GPU memory bandwidth, an upgraded NVLink, and higher compute performance with 3x the Floating-Points Operations per Second (FLOPS) of the A100. Check out our blog post for a deeper dive into the trade-offs between these chips.

This often leaves our customers wondering, is the 18% price increase over the A100 worth the superior power of the H100? Using BeFOri, we can tailor the answer to this question to the specifics of your use case. For example, with Meta’s Llama 3.1 8B Instruct, you can optimise for low latency in a real-time chat bot with up to 16 concurrent requests on the H100 SXM. However, if you’re looking to generate long responses with brief prompts in batches, you’ll want to send at least 128 concurrent requests to the A100 chip to achieve the lowest cost solution.

If you have a use case in mind and want to get the best performance at the lowest possible cost, contact the Ori Sales Team to discuss how we can help you run custom benchmarks.

Why Meta’s Llama 3.1 8B Instruct Model? The Meta family of models are quickly becoming the standard for open source LLMs, and for good reason. The models are very well documented, supported by every mainstream library and framework, and have a ton of tutorials available (including my previous blog post on running Llama 3 7B Base with the HuggingFace Transformers Library).

The HuggingFace Open LLM Leaderboard ranks fine-tuned versions of the Llama3.1 8B Instruct model the highest among models with 13B parameters or less, and Meta’s release of the Instruct model is ranked 38th overall (at the time of writing). The 8B Instruct model has been downloaded over 3.1 million in the last month on HuggingFace, making it the most popular within the Llama Family.

Llama3.2 was released last week, but its quality benchmarks have not yet been published by HuggingFace. The team at Ori is hard at work incorporating speed benchmarks for Llama3.2 into future benchmarking blogs, so stay tuned!

Once you have signed up to Ori's Public Cloud, and requested access to the Meta Llama3.1 models, you can create the a VM on Ori's Public Cloud. When you reach the option to add an init script, copy and paste the appropriate script from below:

|

Init Script for H100 SXM VM: |

Init Script for A100 VM: |

| #!/bin/bash sudo apt update && # Install Nvidia Cuda Toolkit 12.1 sudo apt upgrade wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.1-1_all.deb && sudo dpkg -i cuda-keyring_1.1-1_all.deb && sudo apt-get update && sudo apt-get -y install cuda-toolkit-12-1 && sudo apt-get install -y nvidia-driver-555-open && sudo apt-get install -y cuda-drivers-555 && echo "blacklist nvidia_uvm" | sudo tee /etc/modprobe.d/nvlink-denylist.conf && echo "options nvidia NVreg_NvLinkDisable=1" | sudo tee /etc/modprobe.d/disable-nvlink.conf && sudo apt install nvidia-cuda-toolkit && sudo update-initramfs -u && sudo apt upgrade && sudo reboot | #!/bin/bash sudo apt update && # Install Nvidia Cuda Toolkit 12.1 sudo apt upgrade wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.1-1_all.deb && sudo dpkg -i cuda-keyring_1.1-1_all.deb && sudo apt-get update && sudo apt-get -y install cuda-toolkit-12-1 && sudo apt-get install -y nvidia-driver-555-open && sudo apt-get install -y cuda-drivers-555 && sudo apt install nvidia-cuda-toolkit && sudo update-initramfs -u && sudo apt upgrade && sudo reboot |

It will take up to 10 minutes for your machine to be provisioned and become available. You can copy the ssh command directly from the Ori Console to connect to your machine, and then run the following commands:

|

# Verify installation

|

When you deploy the vLLM app it will begin downloading the model from HuggingFace using your Access Token. You can disregard the warning about a long running init script. While you're waiting, open another terminal window and a second connection to your VM. Once the vLLM app has finished deploying, run the following commands in the new window:

| # Test befori is working with a small benchmark python3 token_benchmark_ray.py --model "meta-llama/Meta-Llama-3.1-70B-Instruct" --mean-input-tokens 64 --stddev-input-tokens 8 --mean-output-tokens 128 --stddev-output-tokens 8 --max-num-completed-requests 2 --timeout 210000 --num-concurrent-requests 1 --results-dir "result_outputs" --llm-api local-vllm # Run a series of benchmarks # Update /BeFOri/src/samle_batch.yaml with configurations screen python3 token_benchmark_ray.py --batch-config-file ~batch_config.yaml |

You can use the following file for the batch_config.yaml

- model: "meta-llama/Meta-Llama-3.1-8B-Instruct" |

Finally, you can reconnect to the machine and resume your screen session to check the status, or simply print the results in ~/BeFOri/results_outputs/

| # When you reconnect later ot see the results # List running screens screen -ls # Replace 1234 with the reference for the instance you want to attach to screen -r 1234 # Detatch from screen # Control+a, then d |

Below is a summary of the benchmarking results. All results were run on Meta’s Llama 3.1 8B Instruct model deployed with vLLM. Purple indicates results for H100 SXM chips, and pink for A100 chips. Where applicable, a lighter shade of each colour is used for input token processing. Finally, light red is used to to indicate the optimal number of concurrent requests with respect to overall token throughput.

Lower is Better

Figure 1: Time to First Token & End to End Latency for Llama 3.1 8B Instruct on NVIDIA H100 SXM and A100 SXM Chips with vLLM

As expected, the H100’s performance was better than the A100 for both TTFT and End-to-End Latency benchmarks.

On average, the A100 took 6x longer to generate the first token than the H100, with the largest differential at 16 concurrent requests where the H100 was 16x faster. The smallest differences were at 1 and 128 concurrent requests where the H100 was 2.6x faster than the A100.

The differences between the H100 and A100 performance for End-to-End Latency is less pronounced with the H100 performing 1.6x faster on average. The largest differential was again at 16 concurrent requests where the H100 was 2.3x faster. The smallest differential was at 1 concurrent request where the H100 was 1.3x faster than the A100.

Figure 2: Time to First Token as a Percentage of End-to-End Latency for Llama 3.1 8B Instruct on NVIDIA H100 SXM and A100 SXM Chips with vLLM

The Time to First Token as a percentage of the End-to-End Latency indicates the ratio of time spent processing the input prompt vs generating new tokens. This graph illustrates that the H100 is more efficient at processing input prompts than the A100 across the board. This indicates that the H100 would be even more economical than the A100 for summarisation and other use cases with longer prompts.

Lower is Better

Figure 3: Inter-Token Latency with standard deviation error bars for Llama 3.1 8B Instruct on NVIDIA H100 SXM and A100 SXM Chips with vLLM

The Inter-token Latency is similar for both the A100 and H100 chips up through 16 concurrent requests. The graph above includes error bars displaying +/- 1 standard deviation from the mean, indicating the difference between the H100 and A100 chips within this range is not statistically significant. The green line indicates the average reading speed of 0.025 seconds per word, the threshold typically used for what a real-time user will perceive as a fast generating LLM.

Surprisingly, at 32 concurrent requests and above, the H100 chip faces a steep increase to 0.04 to 0.05 seconds per token meaning there was much more variability between tokens. This could be an indication of an anomaly during benchmarking where a few tokens generated very slowly pulling the average higher than expected.

Higher is Better

Figure 4: Token Throughput for Llama 3.1 8B Instruct on NVIDIA H100 SXM and A100 SXM Chips with vLLM

To calculate token throughput, the number of tokens generated is divided by the time it took to generate them. This can be measured in 2 ways:

For the H100, 16 concurrent requests achieves optimal throughput with a maximum throughput of 8.01 tokens per second. The A100 chip is optimal at 64 concurrent requests with a maximum throughput of 2.61 tokens per second, which is nearly the same at 128 concurrent requests.

The creators of vLLM from UC Berkeley identified memory as the primary bottleneck impeding LLM performance. Specifically, the attention key value tensors are cached in GPU memory, known as the KV cache, as each token is generated. This is both large and dynamic, creating a challenging memory management problem that attention algorithms address through batching.

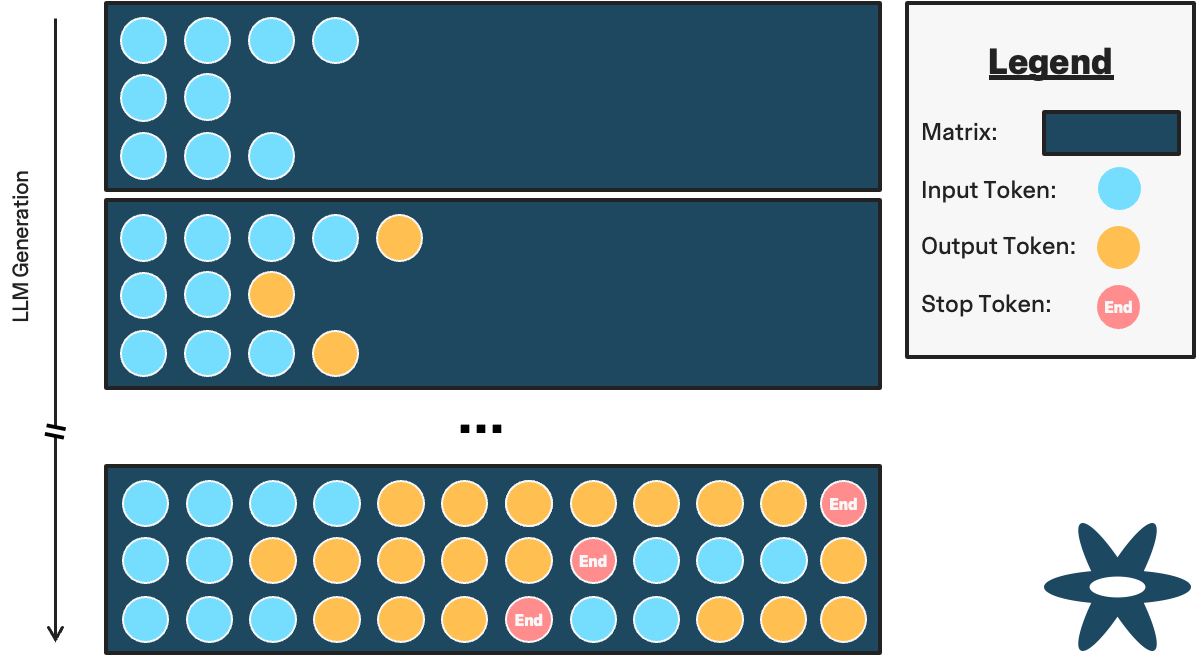

Below is a representation of the vectors of tokens in LLM generation without batching. The input prompt is sent to the tokenizer and transformed into a vector of tokens. The LLM then iteratively generates each progressive token until a "stop" token is generated. This is then sent back to the tokenizer to be transformed from tokens into words.

Figure 5: LLM generation without batching

Without batching, each prompt requires its own copy of the model weights, which memory bounds the number of concurrent requests and slows down generation. However, batching many requests together takes the underlying computations from vector multiplication, which is an inefficient use of GPUs, to large matrix multiplications. Ultimately this reduces vRAM requirements for concurrent requests and speeds up response times. This strategy is particularly useful for applications like chatbots or recommendation systems that handle numerous small requests in varying loads.

Figure 6: LLM Generation with Static Batching

In traditional static batching, the batch size is fixed, meaning responses with fewer tokens will complete earlier and then continue to hold their resources until the longest response in the batch is complete. This is certainly an improvement over loading an additional copy of the model into memory for each request, but it is also far from maximising chip utilisation.

Figure 7: LLM Generation with Continuous Batching

PagedAttention improves on this concept by introducing just-in-time buffer allocation to achieve continuous batching. This is enabled through the allocation of non-contiguous memory as it is needed, without performance degradation. It applies the concepts of virtual memory and paging from operating systems to batching. The KV cache is partitioned into blocks and then PagedAttention identifies and fetches these blocks efficiently during inference.

While this overview should give you a working understanding of PagedAttention, you can find additional technical details behind PagedAttention’s implementation in this paper.

These benchmarking results utilised a single A100 and H100 chip to measure the improvement in performance with vLLM. However this is only analysing 1 aspect of the optimisations offered within the vLLM framework. Larger models can leverage tensor and pipeline parallelism to achieve additional optimisations.

Figure 8: Visualisation of Model and Tensor Parallelism

Tensor parallelism splits a model that is too large for a single GPU across multiple GPUs in a single node. vLLM enables the deployment of massive models without pushing GPU memory to its limits through the tensor-parallel-size parameter. vLLM ensures that the computational workload is evenly distributed, avoiding bottlenecks that can cripple performance.

For models too large to fit on multiple GPUs in a single node, pipeline parallelism can be combined with tensor parallelism to distribute the inference workload further. vLLM breaks down the model along layers and runs them on separate GPUs in tandem. Each GPU processes its stage while others are hard at work, ensuring that none of your GPUs are sitting idle.

Self-hosting is all about control—over your data, your costs, and your infrastructure. With vLLM, you’re not just getting a tool to run large models. You’re getting a streamlined, efficient, and scalable solution that can handle everything from simple batch processing to real-time applications utilising the largest LLMs available today across multiple GPUs and nodes.

In a world where every penny and every millisecond counts, vLLM makes self-hosting both cost effective on Ori’s GPUs AND easy on your developers!

Access BeFOri for LLama2 and LLama3 Benchmarks on Nvidia V100s and H100 Chips

An end to end Tutorial using Ori's Virtual Machines, Llama3.1 8B Instruct, and FastAPI for speedy batch inference with TensorRT LLM.

Discover how to use BeFOri to calculate a cost per input and output token for self hosted models and apply this methodology to the DBRX Base model...