
Discover how to use BeFOri to calculate a cost per input and output token for self hosted models and apply this methodology to the DBRX Base model from Databricks.

With the explosive growth of new AI applications, many companies are faced with uncertainty around what costs they will incur to bring their wildest AI dreams to market. At Ori we are partnering with many of our customers to help them transition from the training stage of development into production deployed inference applications. Developers leveraging open source models have to choose between accessing these models through pay-per-token APIs or hosting the model themselves. Each choice comes with its own set of trade-offs but cost is the deciding factor for most. In a recent survey we conducted, 54% of respondents reported that price is the most important factor, and 77% put price in their top 3 factors.

To this end, we have observed that self-hosting typically becomes the lower cost option when an application has scaled to the point that the GPUs are highly utilised, or used in offline batching applications. While self-hosting provides less flexibility in scaling up (unless you’re building with Ori's serverless Kubernetes offering), the costs can be predicted with far more certainty up to the capacity limit. This is one of the reasons we have been hard at work on our Serverless Kubernetes service to provide a deployment solution that enables customers to only pay for the infrastructure they require. If you’re interested in getting started with Ori’s Kubernetes offering, get in touch with us.

In the meantime, we will review a methodology for comparing self-hosted (virtual or bare metal machines) and pay-per-token APIs and apply this to the recently released DBRX mixture of experts model from Databricks.

Before we dive into the cost analysis, let’s consider a typical architecture for AI applications, which underpins some of the assumptions we use for the cost analysis. To make the most efficient use of resources, and following the best practices around microservice architecture, applications should be designed to separate the application layer from the LLMs they utilise.

This separation is the de facto result of leveraging a third-party API for your LLM, and it is also the most common design we have seen deployed when self-hosting. A simplified architecture diagram is provided below to illustrate this approach. This separation of the AI infrastructure from traditional general purpose cloud deployments allows us to calculate the cost of running the LLM independent of the cost of running the full application. By prorating this cost per input and output token, we are able to make an apples-to-apples comparison.

The monolithic alternative, with the application running on the GPU alongside the LLM, typically results in a CPU bottleneck that limits the performance of the LLM and drives up infrastructure costs unnecessarily. It also makes it difficult to decouple the cost of the application from the LLM, obscuring improvements from optimisations. The trade-off is the added latency from transferring data between the clouds, similar to the latency experienced when calling a third-party API. Fortunately this latency can be negligible relative to the time required for inference, and you have the opportunity to optimise it within your application when self-hosting.

Here we walk through the steps to calculate the cost per token for a self-hosted model. The alternative is calculating the cost per month for calling an API-provided model. We opt for the former because an API cost analysis will be very sensitive to the number of calls, the length of the actual prompts sent, and the length of the responses received after deploying.

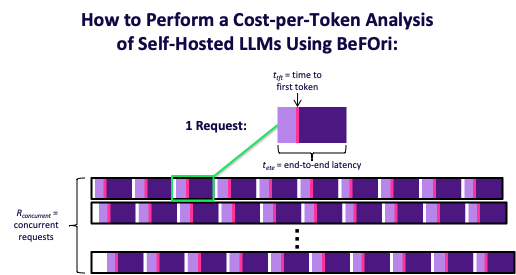

The primary challenge is allocating the total time to run the benchmark between input and output tokens while also accounting for the concurrent requests. This visualisation depicts a BeFOri run (not to scale):

Each row represents a thread of requests to the model. The white gaps represent a new request being made, and the pink lines indicate the time when the first token is returned by the model. We allocate the time before the first token is generated (light purple) to the cost of processing the input tokens and the remaining time (dark purple) to the cost of generating the output tokens.
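The allocation described above can be written compactly. The following is a sketch rather than a formula quoted from BeFOri. It assumes $R_{concurrent}$ requests in flight, mean token counts $N_{in}$ and $N_{out}$ per request, a mean time to first token $\mathrm{TTFT}$ and mean end-to-end latency $L_{e2e}$ measured in seconds, and an hourly server price $P_{hour}$. The symbols $T_{in}$, $L_{e2e}$ and $P_{hour}$ are introduced here for illustration only. These expressions are consistent with the throughput figures reported in the results further down.

```latex
\begin{align}
T_{in}  &= \frac{R_{concurrent} \cdot N_{in}}{\mathrm{TTFT}} \\
T_{out} &= \frac{R_{concurrent} \cdot N_{out}}{L_{e2e} - \mathrm{TTFT}} \\
\text{price per input token}  &= \frac{P_{hour}}{3600 \cdot T_{in}} \\
\text{price per output token} &= \frac{P_{hour}}{3600 \cdot T_{out}}
\end{align}
```

Under this split, the full server cost is charged to input tokens until the first token arrives and to output tokens for the remainder of each request.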

Databricks released its open-source mixture-of-experts DBRX model on 27 March 2024. Both the base model and the fine-tuned Instruct version for few-turn interactions have 132 B total parameters and 16 experts, with 4 experts active on any input. This is considered a fine-grained mixture of experts because it uses a larger number of smaller experts. DBRX was pretrained on 12 T tokens of curated data with a maximum context window of 32k tokens. Databricks leveraged its own stack of tools with 3072 NVIDIA H100 chips connected by InfiniBand.

This approach has paid off for the team at Databricks, achieving an average score of 74.47 for DBRX Instruct and 71.90 for DBRX Base on the HuggingFace Legacy Open LLM Leaderboard. Last week HuggingFace updated their Open LLM Leaderboard, and DBRX Instruct scored 25.58 under the new scoring (DBRX Base's new score is not yet available). Databricks also reported that DBRX Instruct surpassed other leading open source models including LLaMa2-70B, Mixtral, and Grok-1 in Language Understanding (MMLU), Programming (HumanEval), and Math (GSM8K). They also report DBRX Instruct outperforms GPT-3.5 and is competitive with Gemini 1.0 Pro and Mistral Medium when compared to leading closed models.

API access to DBRX is currently only available to Databricks Mosaic AI platform customers. However, the Base and Instruct models are available to download on HuggingFace, along with a model repository on GitHub, all under an open licence.

The following tutorial will walk you through the steps to run DBRX Base on Ori’s 8xH100 bare metal servers. Ori offers this with both the PCIe and SXM module form factors. SXM offers NVLink technology providing higher interconnect bandwidth at a slightly higher price.

This can be adjusted for deployment on smaller virtual machines by ensuring the Nvidia driver version installed is within the list of compatible driver versions.

A few things to note:

The setup and benchmark launch proceed in the following order:

- SSH into your Ori VM; the IP address can be found in the Ori console.
- Check the compatible driver versions.
- Uninstall the Nvidia drivers.
- Uninstall CUDA.
- Install the Nvidia driver.
- Install the CUDA Toolkit.
- Install the PPA.
- Install Fabric Manager.
- Verify that Fabric Manager is installed.
- Start Fabric Manager.
- Reboot the machine and wait before reconnecting.
- Install screen.
- Clone BeFOri and install its dependencies, then set `export PYTHONPATH="/root/BeFOri/src/"`.
- Start a screen session.
- Run the benchmark.
- Press Ctrl-A then D to detach from your screen.
- Check the running screens.

Below we apply the methodology described above to demonstrate its use.

`- model: "databricks/dbrx-base"`

- Start a screen session.
- Run the benchmark.
- Press Ctrl-A then D to detach from your screen.
- Check the running screens.
- Resume the running screen.

`cat /BeFOri/results_outputs/databricks-dbrx-base_64_128_512_summary.json`

| Concurrent Requests (Rconcurrent) | 1 | 2 | 4 | 8 | 16 | 24 | 32 |
|---|---|---|---|---|---|---|---|
| Mean Input Tokens per Request | 65.1000 | 64.2000 | 64.5500 | 63.7625 | 63.3813 | 63.6125 | 63.7531 |
| Time to First Token (ttft) | 47.4256 | 60.1374 | 56.4676 | 50.0456 | 51.3750 | 53.6781 | 61.0651 |
| Input Token Throughput | 1.3727 | 2.1351 | 4.5725 | 10.1927 | 19.7392 | 28.4417 | 33.4086 |
| Price Per Input Token | 0.005621 | 0.003614 | 0.001687 | 0.000757 | 0.000391 | 0.000271 | 0.000231 |

| Concurrent Requests (Rconcurrent) | 40 | 48 | 56 | 64 | 128 | 256 | 512 |
|---|---|---|---|---|---|---|---|
| Mean Input Tokens per Request | 63.7125 | 64.0083 | 63.9214 | 63.8844 | 63.7398 | 63.6738 | 63.6953 |
| Time to First Token (ttft) | 63.2785 | 64.7879 | 74.3808 | 83.8888 | 202.5574 | 425.9254 | 1051.1940 |
| Input Token Throughput | 40.2743 | 47.4224 | 48.1253 | 48.7383 | 40.2785 | 38.2708 | 31.0238 |
| Price Per Input Token | 0.000192 | 0.000163 | 0.000160 | 0.000158 | 0.000192 | 0.000202 | 0.000249 |
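The input-token figures above can be reproduced with a few lines of Python. This is a minimal sketch, not BeFOri's own code: it assumes the time to first token is reported in seconds, and the function names and the hourly server price are hypothetical. The hourly price is back-calculated from the table (price per token × throughput × 3600) and is not quoted in this post.

```python
def input_token_throughput(concurrent_requests, mean_input_tokens, ttft_seconds):
    """Input tokens processed per second across all concurrent requests."""
    return concurrent_requests * mean_input_tokens / ttft_seconds


def price_per_token(hourly_price_usd, tokens_per_second):
    """Server cost per token: dollars per second divided by tokens per second."""
    return hourly_price_usd / 3600 / tokens_per_second


# ASSUMPTION: hourly price back-calculated from the table, not stated in the post.
HOURLY_PRICE_USD = 27.78

# (concurrent requests, mean input tokens per request, TTFT assumed in seconds)
rows = [
    (1, 65.1000, 47.4256),
    (8, 63.7625, 50.0456),
    (64, 63.8844, 83.8888),
]

results = []
for r, n_in, ttft in rows:
    t_in = input_token_throughput(r, n_in, ttft)
    results.append((r, t_in, price_per_token(HOURLY_PRICE_USD, t_in)))

for r, t_in, price in results:
    print(f"{r:>3} concurrent: {t_in:.4f} tokens/s, ${price:.6f} per input token")
```

Swapping in your own hourly price and measured values gives the equivalent figures for a different server or model.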

7. Use these metrics to calculate the output token throughput (Tout) and the cost per output token:

| Concurrent Requests (Rconcurrent) | 1 | 2 | 4 | 8 | 16 | 24 | 32 |
|---|---|---|---|---|---|---|---|
| Mean Output Tokens per Request (Nout) | 121.1000 | 135.5000 | 143.0000 | 146.9000 | 148.5688 | 147.1583 | 148.0688 |
| End-to-End Latency | 611.1326 | 1005.3901 | 1044.7683 | 1025.8838 | 1051.9588 | 1066.1931 | 1126.9538 |
| Time to First Token (ttft) | 47.4256 | 60.1374 | 56.4676 | 50.0456 | 51.3750 | 53.6781 | 61.0651 |
| Output Token Throughput | 0.2148 | 0.2867 | 0.5788 | 1.2043 | 2.3757 | 3.4881 | 4.4453 |
| Price Per Output Token | 0.0359173 | 0.0269137 | 0.0133318 | 0.0064071 | 0.0032479 | 0.0022121 | 0.0017358 |

| Concurrent Requests (Rconcurrent) | 40 | 48 | 56 | 64 | 128 | 256 | 512 |
|---|---|---|---|---|---|---|---|
| Mean Output Tokens per Request (Nout) | 121.1000 | 135.5000 | 143.0000 | 146.9000 | 148.5688 | 147.1583 | 148.0688 |
| End-to-End Latency | 611.1326 | 1005.3901 | 1044.7683 | 1025.8838 | 1051.9588 | 1066.1931 | 1126.9538 |
| Time to First Token (ttft) | 47.4256 | 60.1374 | 56.4676 | 50.0456 | 51.3750 | 53.6781 | 61.0651 |
| Output Token Throughput | 0.2148 | 0.2867 | 0.5788 | 1.2043 | 2.3757 | 3.4881 | 4.4453 |
| Price Per Output Token | 0.0359173 | 0.0269137 | 0.0133318 | 0.0064071 | 0.0032479 | 0.0022121 | 0.0017358 |
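The output-token calculation follows the same pattern, using only the time after the first token has been returned. The sketch below is illustrative rather than BeFOri's implementation: it assumes latencies are in seconds, the function names are hypothetical, and the hourly server price is back-calculated from the published figures rather than quoted in this post. The sample rows are the 1, 16 and 32 concurrent request columns.

```python
def output_token_throughput(concurrent_requests, mean_output_tokens,
                            e2e_latency_seconds, ttft_seconds):
    """Output tokens generated per second, counting only time after the first token."""
    generation_seconds = e2e_latency_seconds - ttft_seconds
    if generation_seconds <= 0:
        raise ValueError("end-to-end latency must exceed time to first token")
    return concurrent_requests * mean_output_tokens / generation_seconds


def price_per_token(hourly_price_usd, tokens_per_second):
    """Server cost per token: dollars per second divided by tokens per second."""
    return hourly_price_usd / 3600 / tokens_per_second


# ASSUMPTION: hourly price back-calculated from the results, not stated in the post.
HOURLY_PRICE_USD = 27.78

# (concurrent requests, Nout, end-to-end latency, TTFT), latencies assumed in seconds
rows = [
    (1, 121.1000, 611.1326, 47.4256),
    (16, 148.5688, 1051.9588, 51.3750),
    (32, 148.0688, 1126.9538, 61.0651),
]

results = []
for r, n_out, e2e, ttft in rows:
    t_out = output_token_throughput(r, n_out, e2e, ttft)
    results.append((r, t_out, price_per_token(HOURLY_PRICE_USD, t_out)))

for r, t_out, price in results:
    print(f"{r:>3} concurrent: {t_out:.4f} tokens/s, ${price:.7f} per output token")
```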

Below you can see a graph of the cost per input and output token as the number of concurrent requests increases.

While the 8xH100 bare metal server can support over 1,000 concurrent requests to DBRX Base, we can see that the optimal cost is near 64 concurrent requests because the overall throughput declines above this. The minimum price per token we were able to achieve was $0.000158 per input token or $158.32 per million input tokens and $0.001259 per output token or $1,259.35 per million output tokens. It is also notable that the cost does not increase much in the range from 40 concurrent requests to 256 concurrent requests, providing a target utilisation range for self hosting.
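To make the target utilisation range concrete, the sketch below picks the cheapest concurrency level from the price per input token values reported above and lists every level whose price stays close to that minimum. The 30% tolerance is a hypothetical threshold chosen for illustration; the post itself only says the cost "does not increase much" in this range.

```python
# Price per input token (USD) by concurrent requests, copied from the results above.
price_per_input_token = {
    1: 0.005621, 2: 0.003614, 4: 0.001687, 8: 0.000757, 16: 0.000391,
    24: 0.000271, 32: 0.000231, 40: 0.000192, 48: 0.000163, 56: 0.000160,
    64: 0.000158, 128: 0.000192, 256: 0.000202, 512: 0.000249,
}

# ASSUMPTION: "near optimal" is read as within 30% of the minimum price.
TOLERANCE = 0.30

best_level = min(price_per_input_token, key=price_per_input_token.get)
best_price = price_per_input_token[best_level]

near_optimal = sorted(
    level
    for level, price in price_per_input_token.items()
    if price <= best_price * (1 + TOLERANCE)
)

print(f"Cheapest level: {best_level} concurrent requests")
print(f"Roughly ${best_price * 1_000_000:.0f} per million input tokens")
print(f"Near-optimal levels: {near_optimal}")
```

With a tighter tolerance the range narrows towards 48 to 64 concurrent requests, which is where the input token throughput peaks.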

While Databricks has not made its per-token pricing for API access to DBRX publicly available (and declined to respond when I requested this information), the self-hosted prices above are higher than OpenAI’s prices for GPT-4 Turbo, one of the most expensive models available today. This means self-hosting without further inference optimisation is likely not the lowest cost option. Databricks has partnered with Nvidia to implement TensorRT to decrease latency and provided an example in the TensorRT-LLM GitHub repo. While they do not publish metrics on how much TensorRT has sped up inference on DBRX, Nvidia has reported a 4x to 8x increase in inference performance with other models.

For those who prioritise data privacy and sovereignty, self-hosting is still the best option, despite the higher cost. At Ori we work with customers to build custom private clouds and we offer VMs, bare metal servers and serverless Kubernetes through our public cloud where you can fine-tune and run inference without exposing your data to model providers such as Databricks.

We’ve now covered how to use BeFOri to calculate a cost per input and output token for self-hosted models and applied this methodology to the DBRX Base model from Databricks. We hope this provides a helpful tutorial for anyone interested in performing a similar cost analysis or running DBRX themselves.

One major critique we see of this analysis is the short prompt length (63.9 tokens on average) and short response length (144.1 tokens on average). These decisions were made to decrease the time needed to run the benchmarks, as this post is meant to illustrate the methodology. We are hard at work on future benchmarks with more realistic input prompts and longer response lengths to provide more usable results. Part of this effort includes improving the prompt sampling in BeFOri to move away from randomly selecting from a repository of Shakespearean sonnets to more realistic prompts. Beyond these improvements, we are also looking to integrate inferencing engines like TensorRT into BeFOri so we can benchmark the optimisation and verify Nvidia's results. Stay tuned for new blog posts and content with these updates.

That being said, our analysis did determine that for DBRX Base running on an 8xH100 PCIe bare metal server, you should aim to run between 40 and 256 concurrent requests for optimal cost and throughput. Within this range you can expect to pay $158 to $202 per million input tokens and $1,259 to $1,489 per million output tokens.
