Choosing between NVIDIA H100 vs A100 - Performance and Costs Considerations

When should you opt for H100 GPUs over A100s for ML training and inference? Here's a top down view when considering cost, performance and use case.

In this blog, I explore the challenges AI companies face when using Kubernetes to optimise GPU usage in multi-cloud environments, and how Ori helps them manage growing costs and reduce complexity.

Companies looking to leverage Artificial Intelligence (AI) are wrestling with a shortage of GPUs and the intricate management of workloads, especially when deploying to multiple environments. Many have turned to Kubernetes to help solve these issues, but Kubernetes comes with its own set of challenges. In this article we explore why Kubernetes has been used to solve the challenges faced by AI companies, and then the difficulties that come with its use.

The popularity of ChatGPT since November 2022 has led to a huge surge in investment interest in similar Generative AI companies, with the entire AI industry market size estimated to double to $400B by 2025. However, Graphics Processing Units (GPUs), the core piece of the AI industry's infrastructure, are in short supply. As a result, budding AI start-ups have to constantly compete for GPU usage slots from various cloud service providers.

This adds another layer of complexity: deploying and managing model training and other workloads in the cloud, across different environments, architectures, platforms, and clusters. Machine learning engineers and CTOs have been turning to Kubernetes to manage their multiple clusters and multi-cloud operations.

AI companies' workloads are compute-intensive and rely heavily on GPUs to train models effectively and efficiently. Many of the fundamental challenges faced by AI companies are addressed by Kubernetes' ability to deliver repeatability, reproducibility, portability, and flexibility across diverse environments and libraries.

Kubernetes has multiple uses for AI companies: scaling a multi-GPU setup for large-scale Machine Learning projects (see, for example, OpenAI), managing deployments on GPU clusters, and running batch inference. Major GPU manufacturers such as NVIDIA and AMD currently support GPU scheduling on Kubernetes, but this requires the use of vendor-provided drivers and device plugins.
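To make the device-plugin point concrete, here is a minimal sketch of how a workload asks Kubernetes for GPUs once NVIDIA's device plugin is installed on the nodes. The resource name `nvidia.com/gpu` is the one that plugin exposes (AMD's plugin uses `amd.com/gpu`). The helper name `gpu_pod_manifest`, the pod name, and the image URL are hypothetical and only for illustration.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a minimal Pod manifest that asks for whole NVIDIA GPUs.

    Hypothetical helper for illustration. It assumes the NVIDIA device
    plugin is already running on the cluster's GPU nodes.
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are declared under limits; Kubernetes uses the
                    # limit as the request when no request is given.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Placeholder pod name and image, not real artefacts.
manifest = gpu_pod_manifest("train-job", "example.com/trainer:latest", 2)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.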

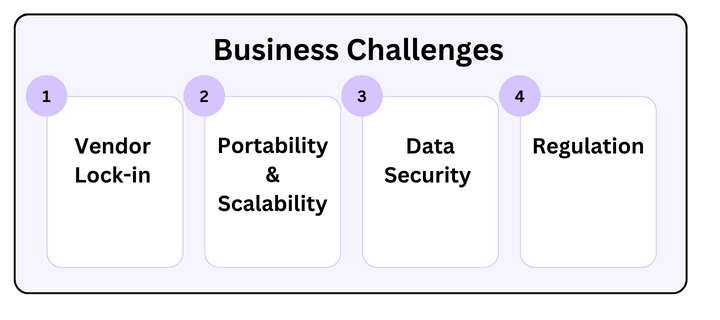

However, the application of Kubernetes in the AI industry is not without its own set of unique challenges. The core challenges businesses will face can be classified into three categories:


On the cloud platform side, major providers such as Google, Amazon, and Microsoft each offer their own managed Kubernetes service for running workloads on GPU-powered compute instances. Each managed service differs from the others in its set-up, rules, and environment.

Hence, managing a multi-cloud platform service calls for specific understanding, knowledge, and accreditation for each cloud platform. However, most AI companies are neither networking nor infrastructure experts, and training and accreditation for just one cloud platform can take anywhere between 3 and 6 months. In-house development of multi-cloud talent therefore requires a high initial investment, with no guarantee of results.

Once an AI company has managed to assemble a team to handle multiple cloud platforms, establishing cross-platform communication and networking is the next challenge. Kubernetes in a single cloud platform is already notoriously challenging in the following ways:

While Kubernetes does provide powerful capabilities for managing containerized applications at scale, these challenges resurface with added intensity and complexity in multi-cloud and multi-environment settings, making multi-cloud even more daunting for users and managers.

A key issue for multi-cloud Kubernetes management is the networking and communication between different cloud platforms. Each platform has its own unique set of APIs, configurations, services, and best practices. In addition to this, the multi-cloud environment can also make standardizing operations, monitoring, logging, and debugging more difficult due to the variations in the offerings of different cloud providers.

Configuring network policies, VPNs, and managing traffic between different cloud providers is complex and error-prone. Each cloud provider may also have its own unique networking infrastructure and limitations, which can further complicate the setup.

In AI-specific cases, different cloud platforms have different GPU cluster configurations with different restrictions. For example, Google Kubernetes Engine (GKE) places several limitations on GPU usage: GPUs cannot be added to an existing node pool, GPU nodes cannot be live-migrated during maintenance events, and GPUs are supported only on specific machine types. These rules are demanding for AI companies running GPUs on Kubernetes, and the operational differences mean a setup may not transfer easily to another cloud platform.

One of the core business challenges AI companies face in a multi-cloud system is cost optimisation. The current relationship between cloud platforms and users is heavily unbalanced, with platforms charging high egress fees to users who wish to move away and imposing long vendor lock-in periods.

AI companies seeking alternative cloud platforms to spread their workload, or for other platform-specific benefits, are hence often on the short end of the bargain. To move their entire workload, networking template, and connections, businesses need a cloud-agnostic setup, so that they can reduce the impact of vendor lock-in and move between cloud providers with ease.

For AI companies, the portability and scaling of their models is often integral to business success. This calls for flexibility and scalability in their infrastructure, specifically in their GPU clusters. Although scalability is a key feature of Kubernetes and cloud computing, in this case it is severely hindered by the current GPU shortage. If a company is unable to scale on one cloud platform, it has to find ways to distribute and manage its workloads across several, and as mentioned above, a multi-cloud environment greatly exacerbates the complexity of Kubernetes.
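As a rough illustration of what distributing a workload across providers involves, the sketch below greedily splits a GPU demand over whatever capacity each provider has free. Everything in it is an assumption for illustration: the function name `spread_gpu_demand`, the provider labels, and the capacity figures are made up, and a real placement decision would also weigh price, GPU model, and data location.

```python
def spread_gpu_demand(demand: int, capacity: dict[str, int]) -> dict[str, int]:
    """Greedily split a GPU demand across providers with free capacity.

    Hypothetical helper: the provider with the most free GPUs is filled
    first, which keeps the number of providers involved small.
    """
    plan: dict[str, int] = {}
    remaining = demand
    by_free_gpus = sorted(capacity.items(), key=lambda kv: kv[1], reverse=True)
    for provider, free in by_free_gpus:
        if remaining == 0:
            break
        take = min(free, remaining)
        if take > 0:
            plan[provider] = take
            remaining -= take
    if remaining > 0:
        raise RuntimeError(f"short by {remaining} GPUs across all providers")
    return plan


# Illustrative numbers only, not real provider capacity.
plan = spread_gpu_demand(24, {"cloud-a": 16, "cloud-b": 4, "cloud-c": 8})
print(plan)  # {'cloud-a': 16, 'cloud-c': 8}
```

Even in this toy form, the job ends up on two providers, and each one brings its own managed Kubernetes service, networking rules, and GPU restrictions to operate.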

Similarly, the need for portability pushes AI companies towards edge computing and hybrid cloud, to reduce latency and to be closer to data sources or end users. When these are managed with Kubernetes, complexities similar to those of multi-cloud platform management arise.

This complexity adds to the company's operational cost, affecting the ratio of cost to revenue and, ultimately, the valuation of the company.

The complexity of Kubernetes in multi-cloud and multi-environment settings means that it is prone to mistakes if configured and managed manually. The consequences of mismanagement can be dire: valuable training data could be lost and precious time and GPU credits wasted, not to mention the potential backlash and regulatory action that follow a data breach.

Another challenge for AI companies using GPUs on Kubernetes is regulatory risk. Governments have adopted strict rules and place a high emphasis on information security. The storage of hyper-sensitive data, and training on it, is a delicate and highly controversial topic. What happens if the most powerful GPU clusters are based outside a government's jurisdiction, and clear regulations say that such data must not leave the state's borders? In this case, an AI company needs control over how workloads are distributed across its clusters and cloud platforms.
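One way to picture that control is a placement filter that only considers clusters inside the permitted jurisdiction, even when larger clusters exist elsewhere. This is a minimal sketch: the `Cluster` type, the `eligible_clusters` function, and the cluster names and sizes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    jurisdiction: str  # where the hardware physically sits
    free_gpus: int


def eligible_clusters(clusters, allowed_jurisdictions, gpus_needed):
    """Return clusters that satisfy both the residency rule and the GPU need.

    Hypothetical helper: results are ordered with the most free GPUs first.
    """
    matches = [
        c
        for c in clusters
        if c.jurisdiction in allowed_jurisdictions and c.free_gpus >= gpus_needed
    ]
    return sorted(matches, key=lambda c: c.free_gpus, reverse=True)


# Illustrative inventory only.
inventory = [
    Cluster("cloud-a-eu", "EU", 8),
    Cluster("cloud-b-us", "US", 64),
    Cluster("on-prem-eu", "EU", 16),
]
for cluster in eligible_clusters(inventory, {"EU"}, gpus_needed=8):
    print(cluster.name)
```

The largest cluster, `cloud-b-us`, is never considered for the EU-restricted job, which is exactly the trade-off the regulation forces.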

After years of development, cloud computing and GPUs now come in a plethora of hardware configurations. AI companies looking to use GPUs on Kubernetes have to take into account the different GPU models and their compatibility with the system. More often than not, they face a complex computational environment with multiple servers running multiple generations of GPUs. To complicate the situation further, major cloud platforms are starting to design and build their own accelerators (e.g. Google's Tensor Processing Unit, TPU, which is unique to GCP) to integrate better with their own workflows and to raise the wall between competitors.

GPUs and Kubernetes were not specifically built for each other. Traditionally, schedulers are used to distribute ML workloads across large numbers of GPUs so that resources are used effectively and automatically. However, such GPU schedulers are difficult to maintain and use, and were not designed for cloud-native environments.

This is where Kubernetes comes in. Built specifically for containerised, microservice architectures in the cloud, Kubernetes is a container orchestrator rather than a native, dedicated GPU scheduler. That means extra complexity: plugins and libraries provided by GPU and software vendors must be installed to add GPU scheduling before Kubernetes can be put to use. There are also limitations when using GPUs on Kubernetes: GPUs cannot be shared across containers and pods (no over-commitment); an individual GPU can only be requested as a whole, not in parts; and GPU requests must equal GPU limits.
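These rules can be checked before a manifest ever reaches the cluster. The following is a small sketch of such a check, assuming a container's `resources` section is available as a plain dictionary with integer GPU counts. The function name `check_gpu_resources` is hypothetical, and the default resource key assumes NVIDIA's device plugin.

```python
def check_gpu_resources(resources: dict, key: str = "nvidia.com/gpu") -> list[str]:
    """Return a list of problems with a container's GPU resource settings.

    Hypothetical pre-flight check covering two rules: GPU counts must be
    whole numbers, and a GPU request must come with an equal GPU limit.
    """
    problems = []
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})

    if key in requests and key not in limits:
        problems.append("GPU request given without a GPU limit")
    if key in requests and key in limits and requests[key] != limits[key]:
        problems.append("GPU request and limit must be equal")

    for section, values in (("requests", requests), ("limits", limits)):
        value = values.get(key)
        if value is None:
            continue
        # Fractions of a GPU cannot be requested; bool is excluded because
        # Python treats True/False as integers.
        if isinstance(value, bool) or not isinstance(value, int) or value < 0:
            problems.append(f"{section}: GPU count must be a whole number, got {value!r}")
    return problems


print(check_gpu_resources({"limits": {"nvidia.com/gpu": 1}}))
print(check_gpu_resources({"requests": {"nvidia.com/gpu": 1}, "limits": {"nvidia.com/gpu": 2}}))
print(check_gpu_resources({"limits": {"nvidia.com/gpu": 0.5}}))
```

The first call returns an empty list, while the second and third each report one problem. A check like this could run in CI alongside other manifest linting.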

Adopting Kubernetes has many inherent benefits for today's AI companies. First and foremost, Kubernetes helps traditionally non-cloud-native GPU clusters move online, giving them the ubiquity of cloud platforms. This in turn allows AI companies to better deploy their workloads and training models in the cloud, reducing deployment cost and increasing training efficiency.

However, as AI companies consider spreading their workloads across different cloud platforms, Kubernetes' inherent complexities and the challenges of managing workloads on multiple cloud platforms become more prominent. A new solution is needed to help AI companies thrive in a multi-cloud environment. Ori's application-centric networking capability introduces a standardised way to run applications consistently across any environment or cloud. Its unique approach to application orchestration removes silos between development and operations and greatly improves application security and reliability.

For AI companies, Ori simplifies Kubernetes in multi-cloud, hybrid-cloud, and on the edge, bridging the gap between AI and infrastructure without compromising security.

