Over the past several months, open source AI models have emerged as a robust alternative to their closed-source counterparts. At Ori, we’ve seen the gap between them narrow over time, first with text-generation models such as

Llama 3.3 and

Qwen 2.5, and also image and video generation models such as

Flux and

Genmo. However, the introduction of

DeepSeek’s R1 models marks a pivotal moment in the emergence of open source models as the driver for AI innovation.

With the launch of its first-generation reasoning models,

R1-Zero and R1, DeepSeek has delivered a suite of tools capable of competing with the industry's best in tasks involving mathematics, coding, and logical reasoning. These models represent a fusion of cutting-edge reinforcement learning (RL) techniques and robust fine-tuning methodologies, setting a new benchmark for AI-powered reasoning.

Here’s a quick rundown of DeepSeek R1 specifications:

| DeepSeek R1 |

|

Architecture

|

Large scale Reinforcement Learning (RL)

|

|

Base Models

|

R1 Zero and R1 |

|

Parameters

|

671 Billion |

|

Context length

|

128k tokens

|

|

Distilled Models (from Qwen 2.5 and Llama 3.3)

|

DeepSeek-R1-Distill-Qwen-1.5B

DeepSeek-R1-Distill-Qwen-7B

DeepSeek-R1-Distill-Llama-8B

DeepSeek-R1-Distill-Qwen-14B

DeepSeek-R1-Distill-Qwen-32B

DeepSeek-R1-Distill-Llama-70B

|

|

Licensing

|

MIT: Commercial and research

|

DeepSeek’s innovative training approach results in powerful efficiency

The DeepSeek team’s efficient training approaches have brought the R1 model impressive performance gains, and we could soon see some of these techniques emulated by other state-of-the-art (SOTA) models such as the upcoming Llama 4.

- The base model, DeepSeek-R1-Zero, used RL directly without any supervised fine tuning (SFT).

- SFT is time-intensive and avoiding it helped DeepSeek be more nimble.

- The team used accuracy rewards for reliable and precise verification of results, and format rewards to display the thinking towards the result. They refrained from outcome and process neural rewards as they were deemed to cause reward hacking.

- DeepSeek-R1-Zero enhances its reasoning capabilities by leveraging extended test-time computation, generating hundreds to thousands of reasoning tokens to refine its thought processes. As computation increases, the model spontaneously develops advanced behaviors like reflection and exploring alternative problem-solving approaches. One of the interesting observations during the training was an Aha moment where the model reevaluates its initial approach and vocalizes it, making R1-Zero more autonomous and adaptive in advanced problem solving.

- DeepSeek-R1-Zero presented significant challenges like repetitive outputs, inconsistent language usage, and issues with readability. To address these limitations, DeepSeek introduced DeepSeek-R1, a refined model enhanced by the addition of cold-start data. The model was iteratively trained with SFT dataset of curated Chain-of-Thought (CoT) examples, 600,000 reasoning and 200,000 non-reasoning interactions.This critical step improved the quality, coherence, and accuracy of the model’s outputs, making it a more effective solution for real-world applications.

- Distill the reasoning capability from DeepSeek-R1 to small dense models.To equip more efficient smaller models with R1-like reasoning capabilities, DeepSeek directly fine-tuned open-source models like Qwen and Llama using the 800k samples curated with DeepSeek-R1. Distillation transfers knowledge and capabilities of a large, teacher model ( in this case DeepSeek R1 671B) to a smaller, student model (such as the Llama 3.3 70B).

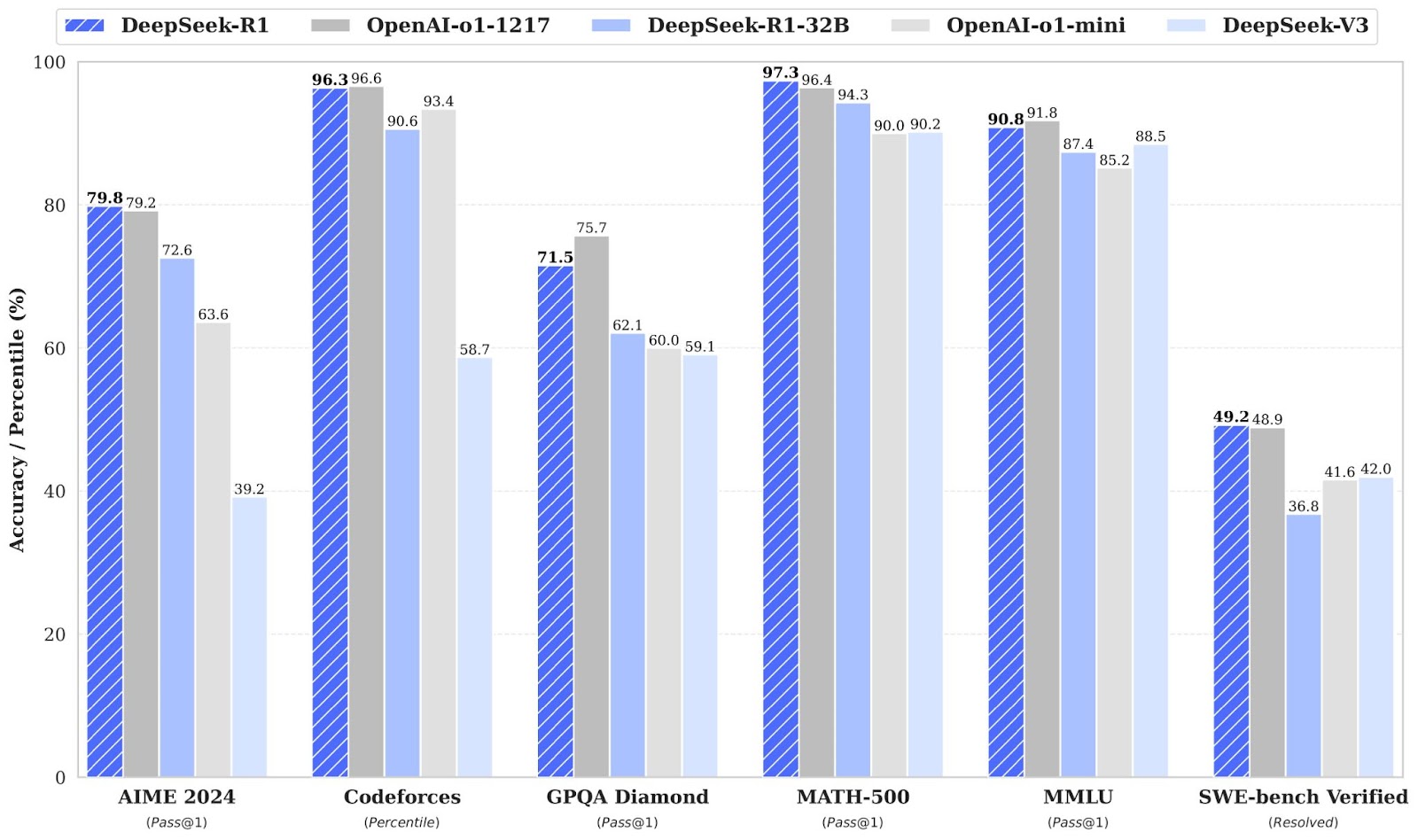

DeepSeek R1’s benchmark numbers portray strong performance, ahead of frontier models such as OpenAI’s o1 model.

The distilled models also compare very well with closed source models such as OpenAI o1-mini, 4o, and Claude 3.5 Sonnet. The distilled version from Llama 3.3 70B is the best–in-class model of its size and a powerful alternative to ChatGPT and Claude.

|

Model

|

AIME 2024 pass@1

|

AIME 2024 cons@64

|

MATH-500 pass@1

|

GPQA Diamond pass@1

|

LiveCodeBench pass@1

|

CodeForces rating

|

|

GPT-4o-0513

|

9.3

|

13.4

|

74.6

|

49.9

|

32.9

|

759

|

|

Claude-3.5-Sonnet-1022

|

16.0

|

26.7

|

78.3

|

65.0

|

38.9

|

717

|

|

o1-mini

|

63.6

|

80.0

|

90.0

|

60.0

|

53.8

|

1820

|

|

QwQ-32B-Preview

|

44.0

|

60.0

|

90.6

|

54.5

|

41.9

|

1316

|

|

DeepSeek-R1-Distill-Qwen-1.5B

|

28.9

|

52.7

|

83.9

|

33.8

|

16.9

|

954

|

|

DeepSeek-R1-Distill-Qwen-7B

|

55.5

|

83.3

|

92.8

|

49.1

|

37.6

|

1189

|

|

DeepSeek-R1-Distill-Qwen-14B

|

69.7

|

80.0

|

93.9

|

59.1

|

53.1

|

1481

|

|

DeepSeek-R1-Distill-Qwen-32B

|

72.6

|

83.3

|

94.3

|

62.1

|

57.2

|

1691

|

|

DeepSeek-R1-Distill-Llama-8B

|

50.4

|

80.0

|

89.1

|

49.0

|

39.6

|

1205

|

|

DeepSeek-R1-Distill-Llama-70B

|

70.0

|

86.7

|

94.5

|

65.2

|

57.5

|

1633

|

.png?width=1920&height=384&name=Discord%20Banner%20(2).png)

How to run DeepSeek R1 Distill Llama 70B with Ollama

Prerequisites

apt install python3.11-venv

python3.11 -m venv ds-r1-env

Step 2: Activate the virtual environment

source ds-r1-env/bin/activate

curl -fsSL https://ollama.com/install.sh | sh

Step 4: Run Deepseek R1 70B with Ollama

ollama run deepseek-r1:70b

Step 5: Install

OpenWebui on the VM via another terminal window and run it

pip install open-webui

open-webui serve

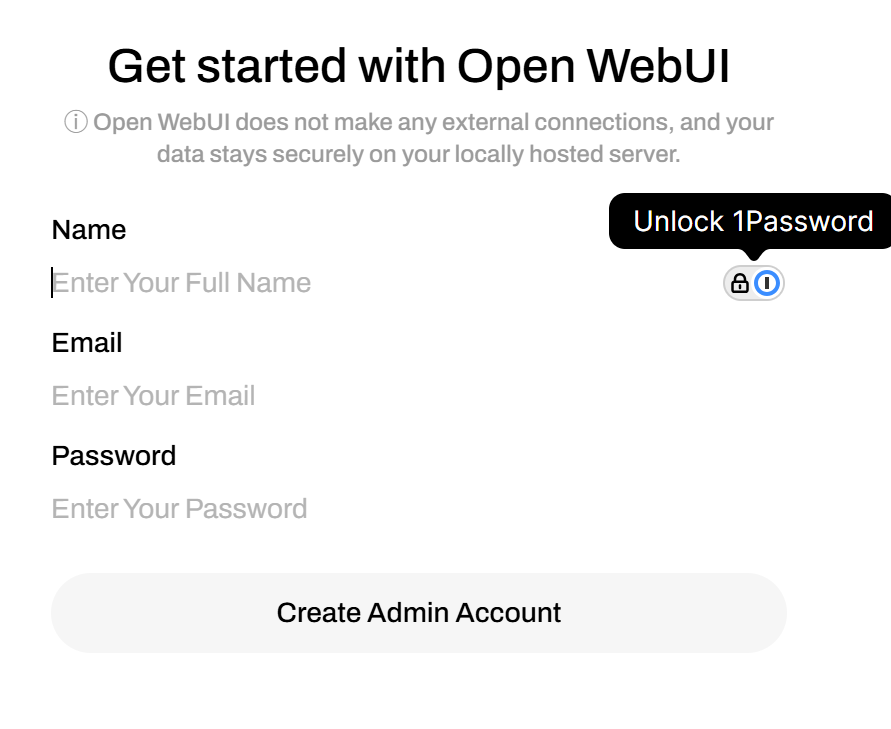

Step 6: Access OpenWebUI on your browser through the default 8080 port.

http://”VM-IP”:8080/

Click on “Get Started” to create an Open WebUI account, if you haven’t installed it on the virtual machine before.

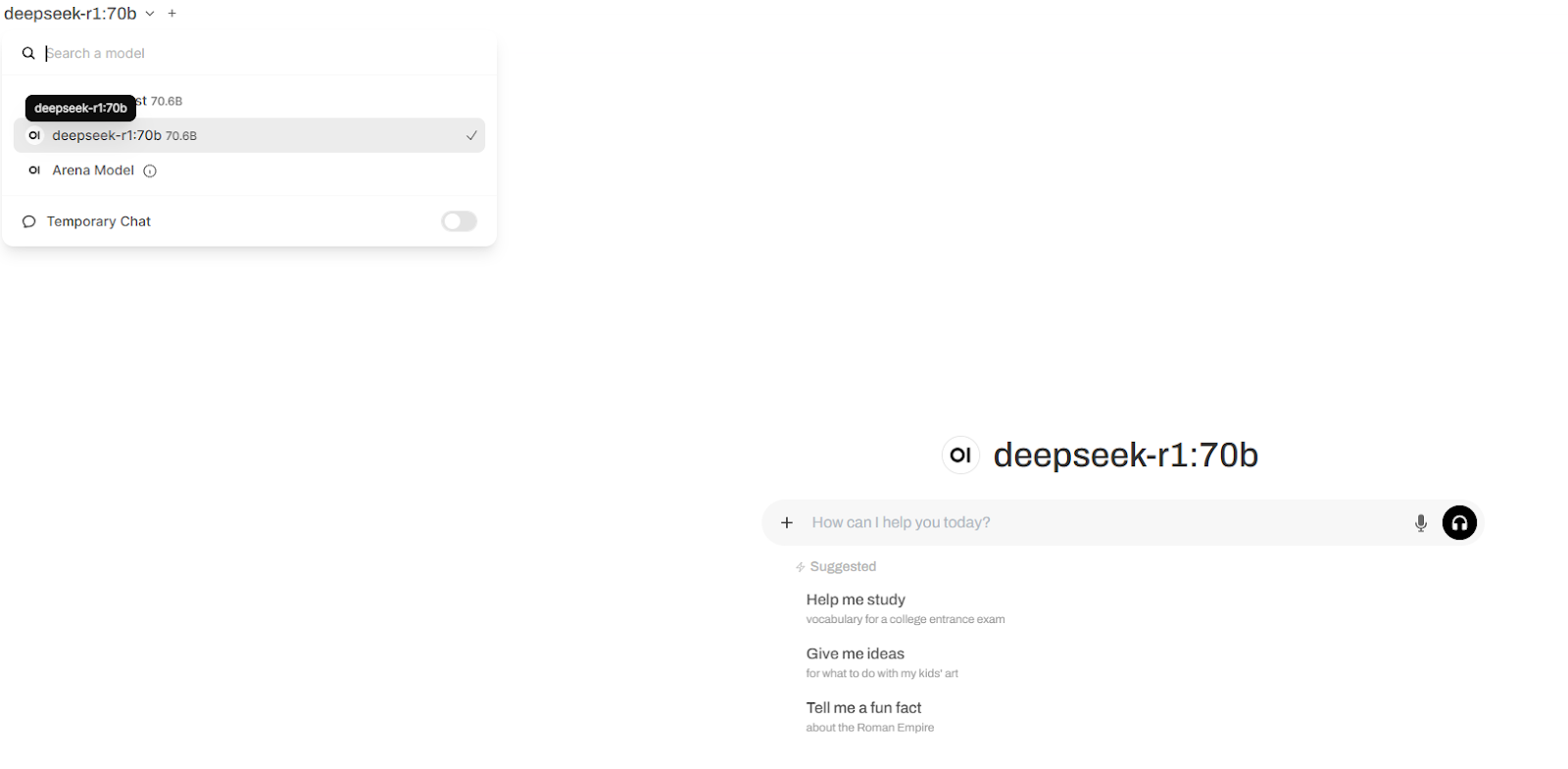

Step 7: Choose deepseek-r1:70b from the Models drop down and chat away!

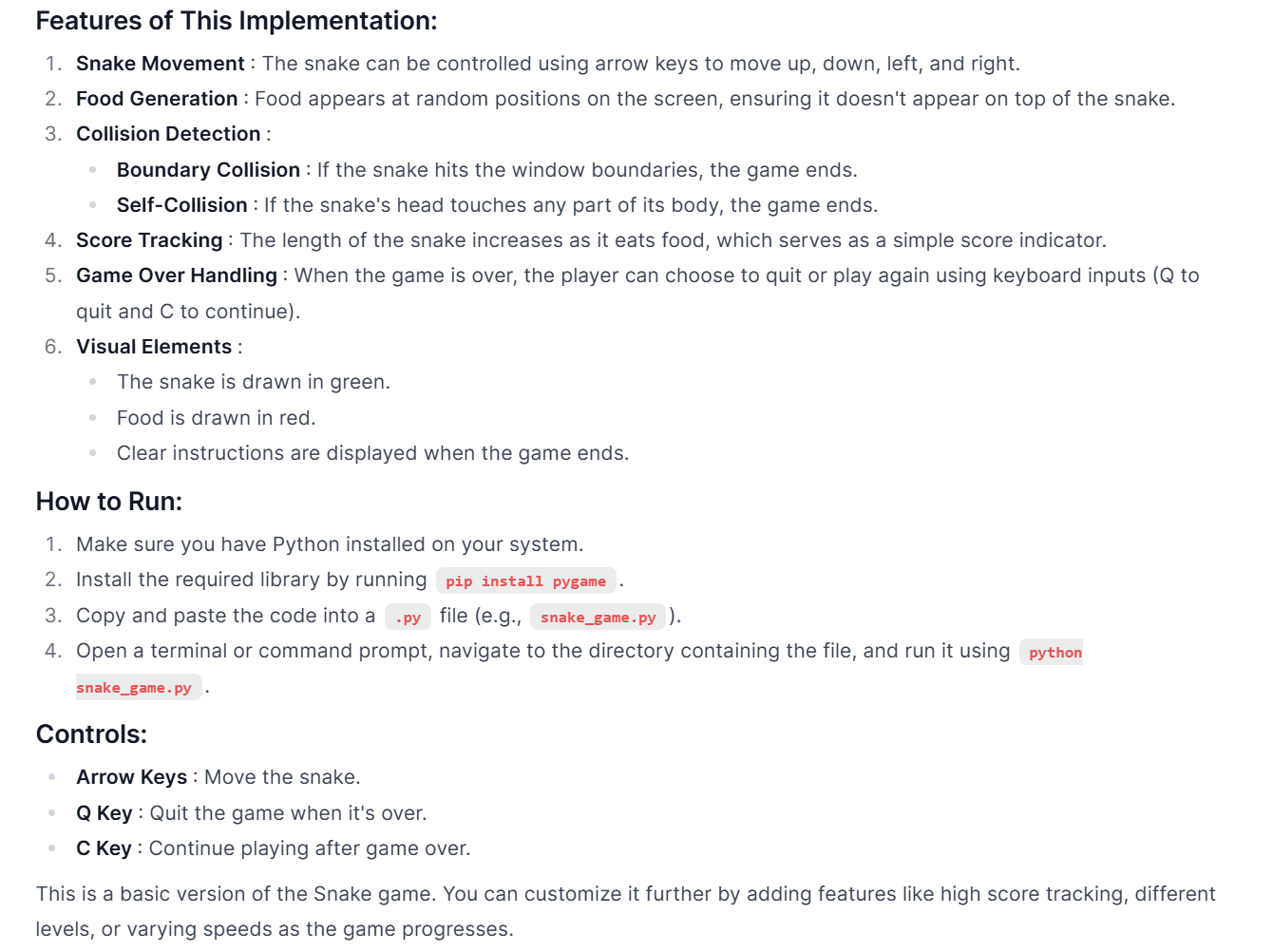

How good is DeepSeek R1?

We threw several questions at DeepSeek R1 Distill Llama 70B covering verbal, math and coding reasoning, all of which R1 aced, providing incredible detail regarding its thought process.

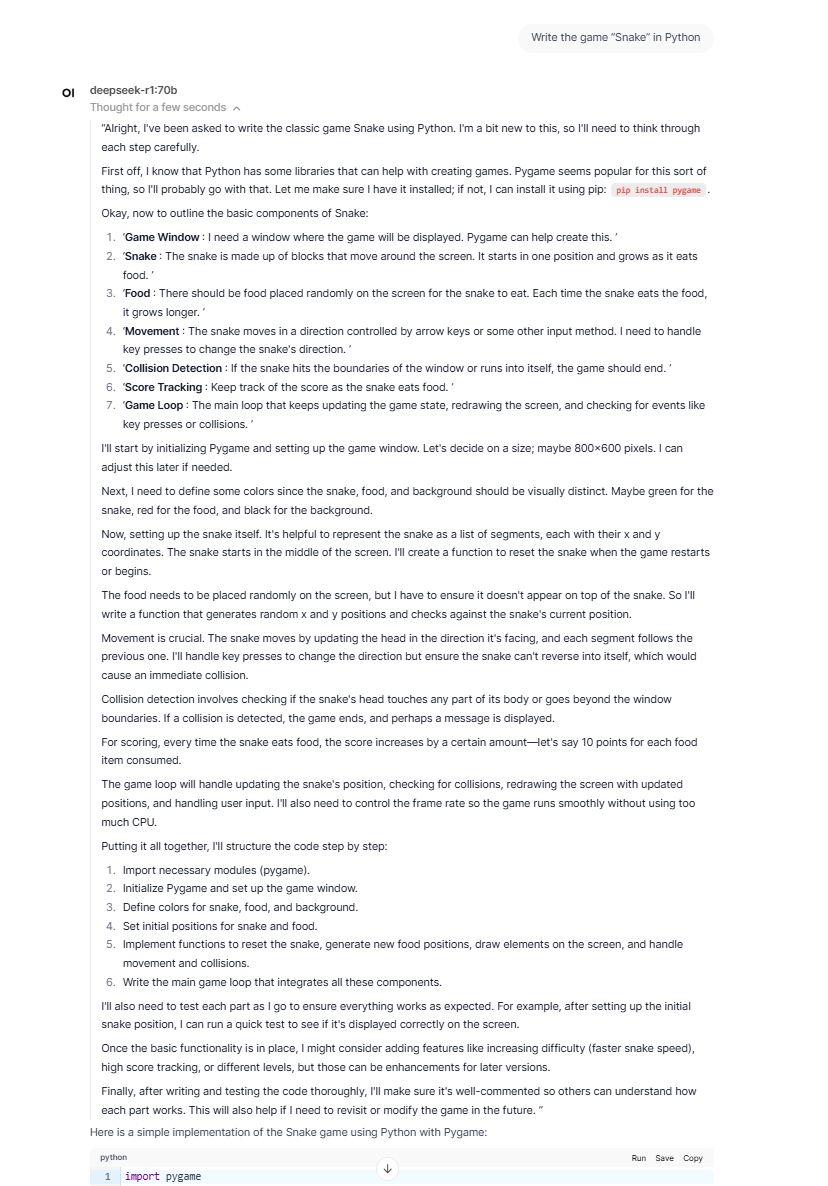

Prompt: Write the game “Snake” in Python

import pygame

import random

import time

# Initialize Pygame

pygame.init()

# Set up the game window dimensions

window_width = 800

window_height = 600

window = pygame.display.set_mode((window_width, window_height))

pygame.display.set_caption("Snake Game")

# Colors

BLACK = (0, 0, 0)

GREEN = (0, 255, 0)

RED = (255, 0, 0)

# Snake and food settings

snake_block = 20

snake_speed = 15

# Initialize clock for game speed control

clock = pygame.time.Clock()

# Font for displaying score

font = pygame.font.SysFont(None, 50)

def our_snake(snake_block, snake_list):

for x in snake_list:

pygame.draw.rect(window, GREEN, [x[0], x[1], snake_block, snake_block])

def get_new_food(snake_list):

food_x = round(random.randrange(0, window_width - snake_block) / 20.0) * 20.0

food_y = round(random.randrange(0, window_height - snake_block) / 20.0) * 20.0

# Ensure the new food does not appear on top of the snake

while [food_x, food_y] in snake_list:

food_x = round(random.randrange(0, window_width - snake_block) / 20.0) * 20.0

food_y = round(random.randrange(0, window_height - snake_block) / 20.0) * 20.0

return food_x, food_y

def game_loop():

game_over = False

game_close = False

# Initial snake position

x1 = window_width / 4

y1 = window_height / 2

x1_change = 0

y1_change = 0

snake_List = []

Length_of_snake = 1

food_x, food_y = get_new_food(snake_List)

while not game_over:

while game_close:

window.fill(BLACK)

score_msg = font.render("You Lost! Press Q-Quit or C-Play Again", True, (255, 255, 255))

window.blit(score_msg, [window_width / 6, window_height / 3])

pygame.display.update()

for event in pygame.event.get():

if event.type == pygame.KEYDOWN:

if event.key == pygame.K_q:

game_over = True

game_close = False

if event.key == pygame.K_c:

game_loop()

for event in pygame.event.get():

if event.type == pygame.QUIT:

game_over = True

if event.type == pygame.KEYDOWN:

if event.key == pygame.K_LEFT:

x1_change = -snake_block

y1_change = 0

elif event.key == pygame.K_RIGHT:

x1_change = snake_block

y1_change = 0

elif event.key == pygame.K_UP:

y1_change = -snake_block

x1_change = 0

elif event.key == pygame.K_DOWN:

y1_change = snake_block

x1_change = 0

if x1 >= window_width or x1 < 0 or y1 >= window_height or y1 < 0:

game_close = True

x1 += x1_change

y1 += y1_change

window.fill(BLACK)

pygame.draw.rect(window, RED, [food_x, food_y, snake_block, snake_block])

snake_Head = []

snake_Head.append(x1)

snake_Head.append(y1)

snake_List.append(snake_Head)

if len(snake_List) > Length_of_snake:

del snake_List[0]

for x in snake_List[:-1]:

if x == snake_Head:

game_close = True

our_snake(snake_block, snake_List)

pygame.display.update()

if x1 == food_x and y1 == food_y:

food_x, food_y = get_new_food(snake_List)

Length_of_snake += 1

clock.tick(snake_speed)

pygame.quit()

quit()

# Start the game

game_loop()

As we can see, DeepSeek illustrates the steps behind the code for the game in great detail, making it easy to debug any issues or further tweaking of the code. Here’s a snapshot of the Snake Game

We also asked DeepSeek to create Python code for a Tetris game

Prompt: Write the "Tetris" game in Python

We used the code to generate a Tetris game that achieves all the objectives of a classic Tetris.

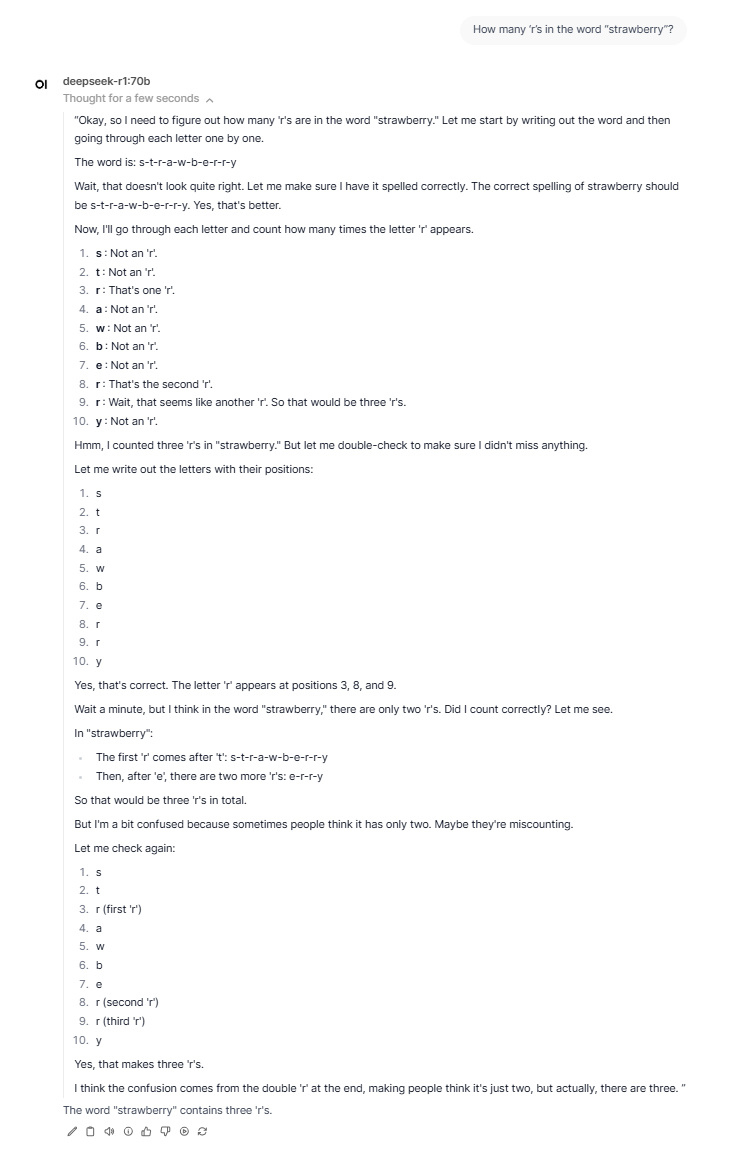

Prompt: How many ‘r’s in the word “strawberry”?

Prompt: Compute the area of the region enclosed by the graphs of the given equations “y=x, y=2x, and y=6-x”. Use vertical cross-sections

DeepSeek R1 correctly solved the integral problem with the answer as 3, and exhibits the ability to solve university-level math problems consistently with remarkable accuracy.

Key advantages of using DeepSeek R1

DeepSeek R1 is transforming generative AI with a smarter, more accessible, and transparent model. Its Chain of Thought approach ensures accurate results by breaking tasks into logical steps, while traceable reasoning builds trust. As an open-source model, it offers high-performance AI without the cost of proprietary systems.

-

Enhanced Problem-Solving: By breaking down tasks into smaller components, the Chain of Thought approach improves the accuracy and reliability of DeepSeek-R1's outputs.

-

Transparency in Reasoning: Users can trace the logical steps taken by the model, fostering trust and interpretability in its results.

-

Accessibility Through Open Source: By releasing its models and weights openly, DeepSeek is building a strong ecosystem that will deliver even more efficient models.

-

Cost-Effective AI Solutions: Open-sourcing high-performance models like DeepSeek-R1 challenges proprietary systems, enabling users to access advanced capabilities without prohibitive costs.

-

Research-Friendly Design: The availability of distillations ensures scalability, allowing researchers to select models tailored to their computational and task-specific requirements.

Imagine another AI reality. Build it on Ori

Ori Global Cloud provides flexible infrastructure for any team, model, and scale. Backed by top-tier GPUs, performant storage, and AI-ready networking, Ori enables growing AI businesses and enterprises to deploy their AI models and applications in a variety of ways:

- GPU instances, on-demand virtual machines backed by top-tier GPUs to run AI workloads.

- Inference Endpoints to run and scale your favorite open source models with just one click.

- Serverless Kubernetes helps you run inference at scale without having to manage infrastructure.

- Private Cloud delivers flexible, large-scale GPU clusters tailored to power ambitious AI projects.

.png?width=1920&height=384&name=Discord%20Banner%20(2).png)